KubeSphere, an open-source, distributed operating system with Kubernetes as its kernel, helps you manage cloud-native applications on a GUI container platform. TiDB is an open-source, cloud-native database that runs smoothly on Kubernetes.

In my last blog post, I talked about how to deploy TiDB on KubeSphere. If you want TiDB to be available to tenants across the workspace, you can release the TiDB app to the KubeSphere public repository, also known as the KubeSphere App Store. In this way, all tenants can easily deploy TiDB in their project, without having to repeat the same steps.

In this article, I will walk you through how to deploy TiDB on KubeSphere using app templates and how to release TiDB to the App Store.

Prerequisites

Before you try the steps in this post, make sure:

- You have prepared the environment with KubeSphere installed.

- You have enabled the KubeSphere App Store.

Prepare TiDB Helm Charts

To deploy TiDB on KubeSphere, you need TiDB Helm charts. Helm helps you create, install, and manage Kubernetes applications. A Helm chart contains files that describe the necessary collection of Kubernetes resources. In this section, I’ll demonstrate how to download the required Helm charts and upload them to KubeSphere.

Download TiDB Helm Charts

- Install Helm. Depending on your operating system and tools, there are several ways to install Helm. For detailed information, see the Helm documentation. The simplest way to install Helm is to run the following command directly:

curl https://raw.githubusercontent.com/helm/helm/master/scripts/get-helm-3 | bash

- Add the PingCAP TiDB Helm repository:

helm repo add pingcap https://charts.pingcap.org/

- View all the Helm charts in this repository:

$ helm search repo pingcap --version=v1.1.6

NAME CHART VERSION APP VERSION DESCRIPTION

pingcap/tidb-backup v1.1.6 A Helm chart for TiDB Backup or Restore

pingcap/tidb-cluster v1.1.6 A Helm chart for TiDB Cluster

pingcap/tidb-drainer v1.1.6 A Helm chart for TiDB Binlog drainer.

pingcap/tidb-lightning v1.1.6 A Helm chart for TiDB Lightning

pingcap/tidb-operator v1.1.6 v1.1.6 tidb-operator Helm chart for Kubernetes

pingcap/tikv-importer release-1.1 A Helm chart for TiKV Importer

Note that in this article, I use the v1.1.6 charts. You can also get the latest version released by PingCAP.

- Download the charts you need. For example:

helm pull pingcap/tidb-operator --version=v1.1.6 &&
helm pull pingcap/tidb-cluster --version=v1.1.6

- Make sure they have been successfully pulled:

$ ls | grep tidb

tidb-cluster-v1.1.6.tgz

tidb-operator-v1.1.6.tgz
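
The download steps above can be collected into one small script. The following is only a sketch under stated assumptions: the variable names (CHART_VERSION, CHARTS, DRY_RUN) and the helper functions are illustrative and not part of Helm or of the original walkthrough. By default it only prints the commands it would run; set DRY_RUN=0 to actually execute them against the PingCAP repository.

```shell
#!/usr/bin/env bash
# Sketch: add the PingCAP repository and pull the charts used in this article.
# CHART_VERSION, CHARTS, DRY_RUN, and the helper names are illustrative assumptions.
set -euo pipefail

CHART_VERSION="${CHART_VERSION:-v1.1.6}"
CHARTS=(tidb-operator tidb-cluster)
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands; 0 = run them

# Print a command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# File name that `helm pull` writes for a given chart and version.
chart_archive() {
  echo "$1-$2.tgz"
}

pull_charts() {
  run helm repo add pingcap https://charts.pingcap.org/
  run helm repo update
  local chart
  for chart in "${CHARTS[@]}"; do
    run helm pull "pingcap/${chart}" --version="${CHART_VERSION}"
    # Only look for the archive when the pull really ran.
    if [ "$DRY_RUN" != "1" ] && [ ! -f "$(chart_archive "$chart" "$CHART_VERSION")" ]; then
      echo "missing archive for ${chart}" >&2
      return 1
    fi
  done
}

pull_charts
```

Running it with DRY_RUN=0 should leave the same two .tgz files in the current directory as the manual steps do.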

Upload Helm charts to KubeSphere

Now that you have Helm charts ready, you can upload them to KubeSphere as app templates.

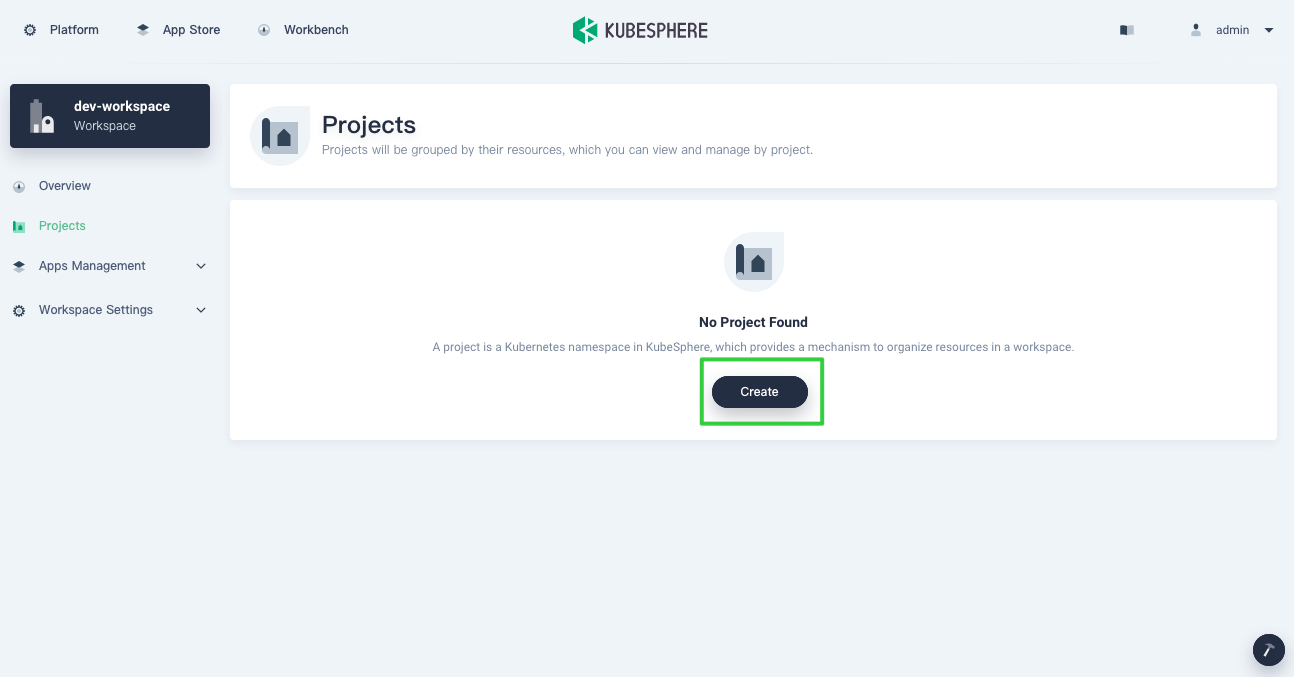

- Log in to the KubeSphere web console and create a workspace.

- In the upper left corner of the current page, click Platform to display the Access Control page.

- In Workspaces, click Create to create a new workspace and give it a name, for example, dev-workspace, as shown below.

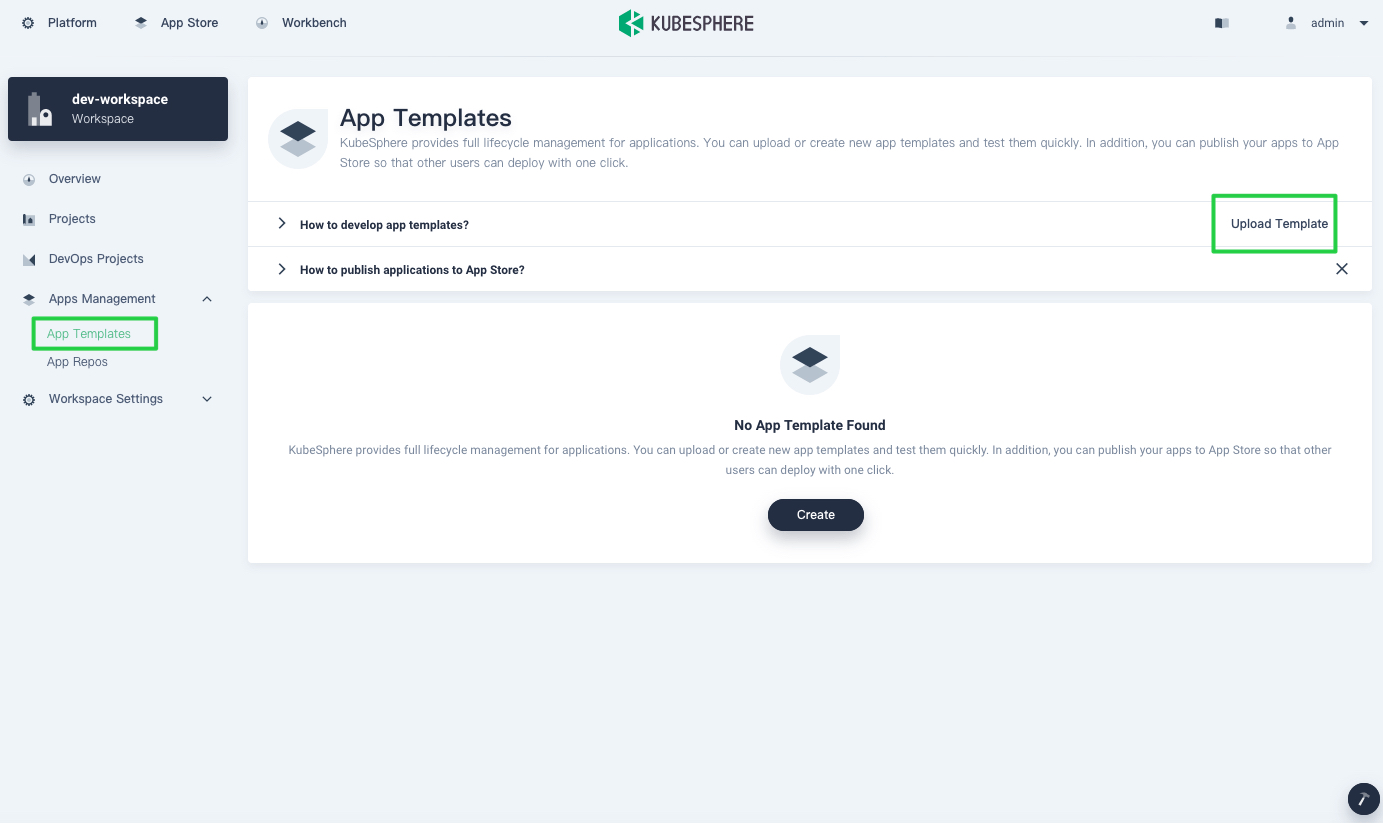

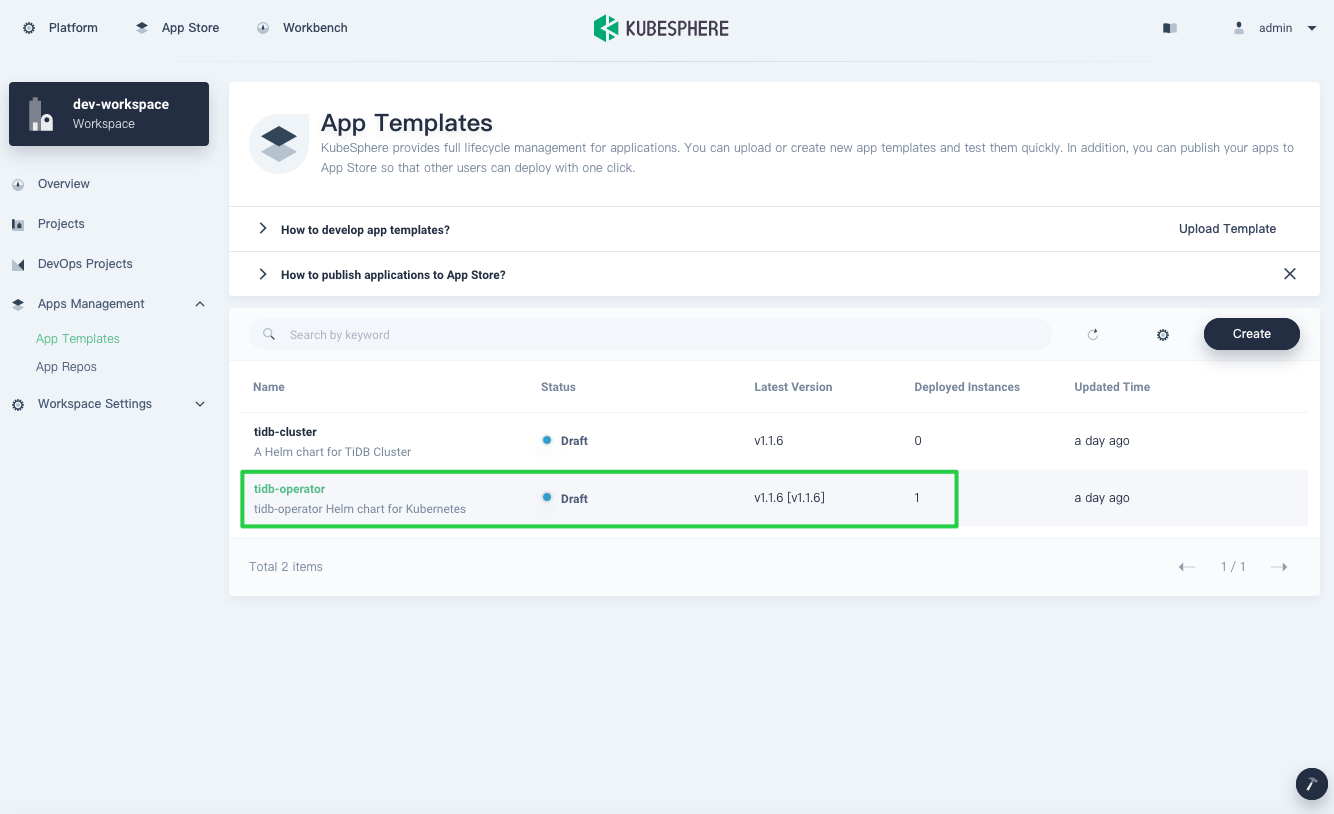

- Go to your workspace. From the navigation bar, select App Templates, and, on the right, click Upload Template.

My last blog post explained how to deploy TiDB using an app repository. This time, let’s upload Helm charts as app templates.

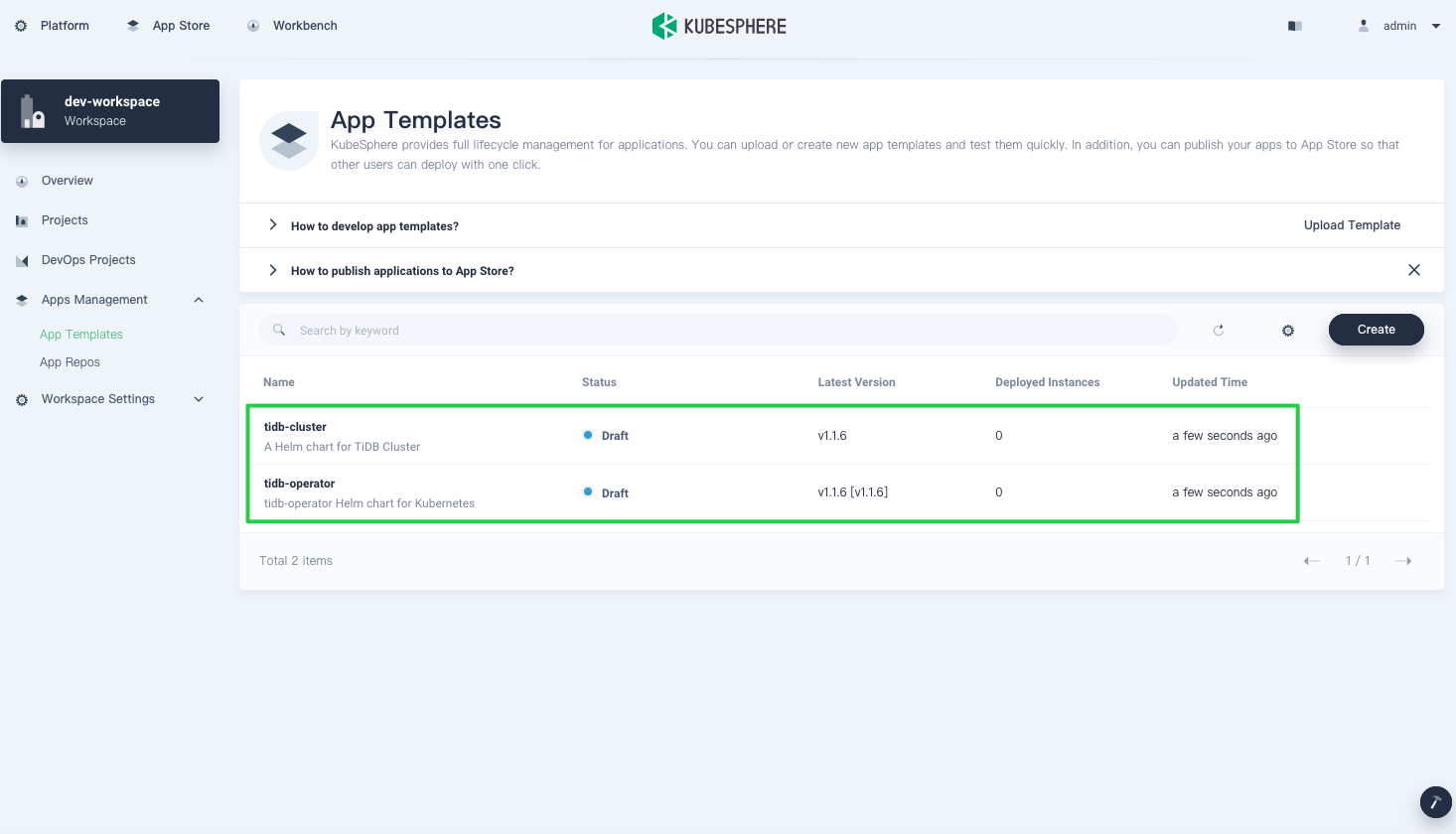

- Select the Helm charts you want to upload to KubeSphere. After they are successfully uploaded, they appear in the list below.
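
Before uploading, it can help to confirm that each archive really is a packaged chart. A packaged Helm chart is a gzipped tarball with a Chart.yaml file inside a single top-level directory, so a quick local check might look like the sketch below. The function name is_helm_chart is hypothetical, and the file names are the ones pulled earlier in this article.

```shell
#!/usr/bin/env bash
# Sketch: check that a pulled archive looks like a packaged Helm chart.
# is_helm_chart is a hypothetical helper, not a Helm or KubeSphere command.
set -euo pipefail

# Succeeds if the archive exists and contains <top-level-dir>/Chart.yaml.
is_helm_chart() {
  local archive="$1"
  local listing
  [ -f "$archive" ] || return 1
  # Capture the listing first so an early grep exit cannot break the pipeline.
  listing="$(tar -tzf "$archive")" || return 1
  grep -Eq '^[^/]+/Chart\.yaml$' <<<"$listing"
}

for archive in tidb-operator-v1.1.6.tgz tidb-cluster-v1.1.6.tgz; do
  if is_helm_chart "$archive"; then
    echo "ok: ${archive}"
  else
    echo "not found or not a chart: ${archive}"
  fi
done
```

If Helm is installed, running helm show chart on an archive prints its chart metadata, which is another way to inspect it before upload.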

Deploy TiDB Operator and a TiDB cluster

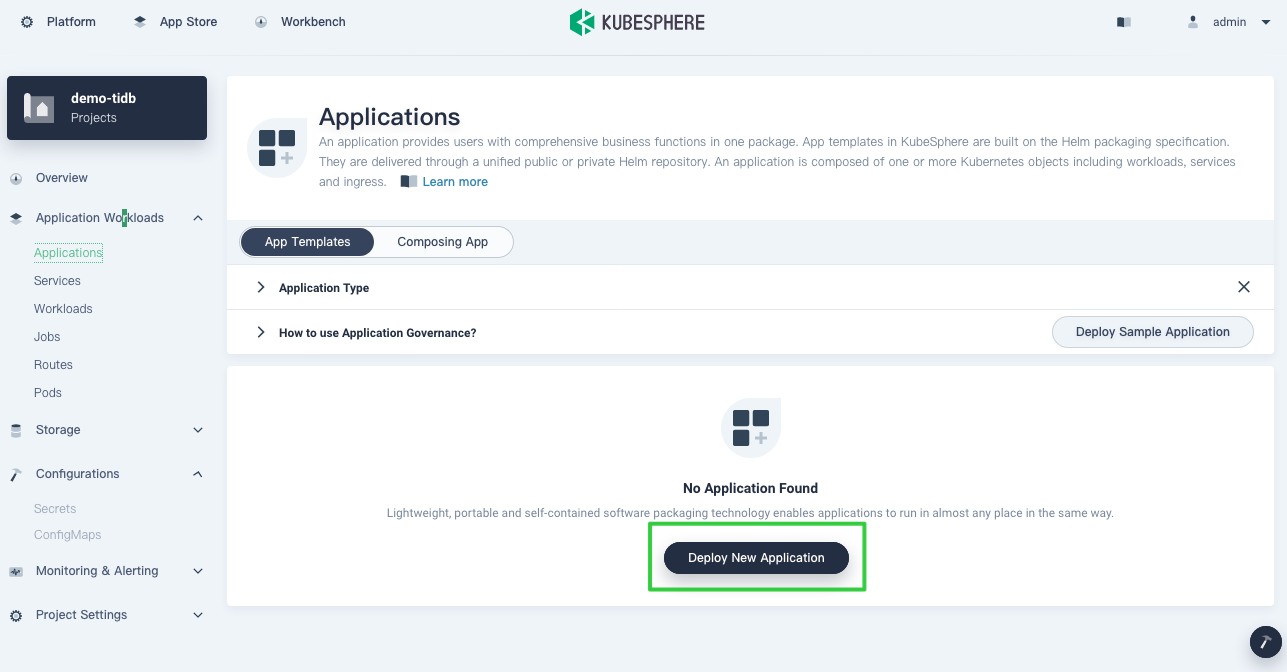

- To deploy TiDB, you need to create a project (also known as a namespace) where all workloads of an app run.

- After you create the project, navigate to Applications and click Deploy New Application.

- In the Deploy New Application dialog box, select From App Templates.

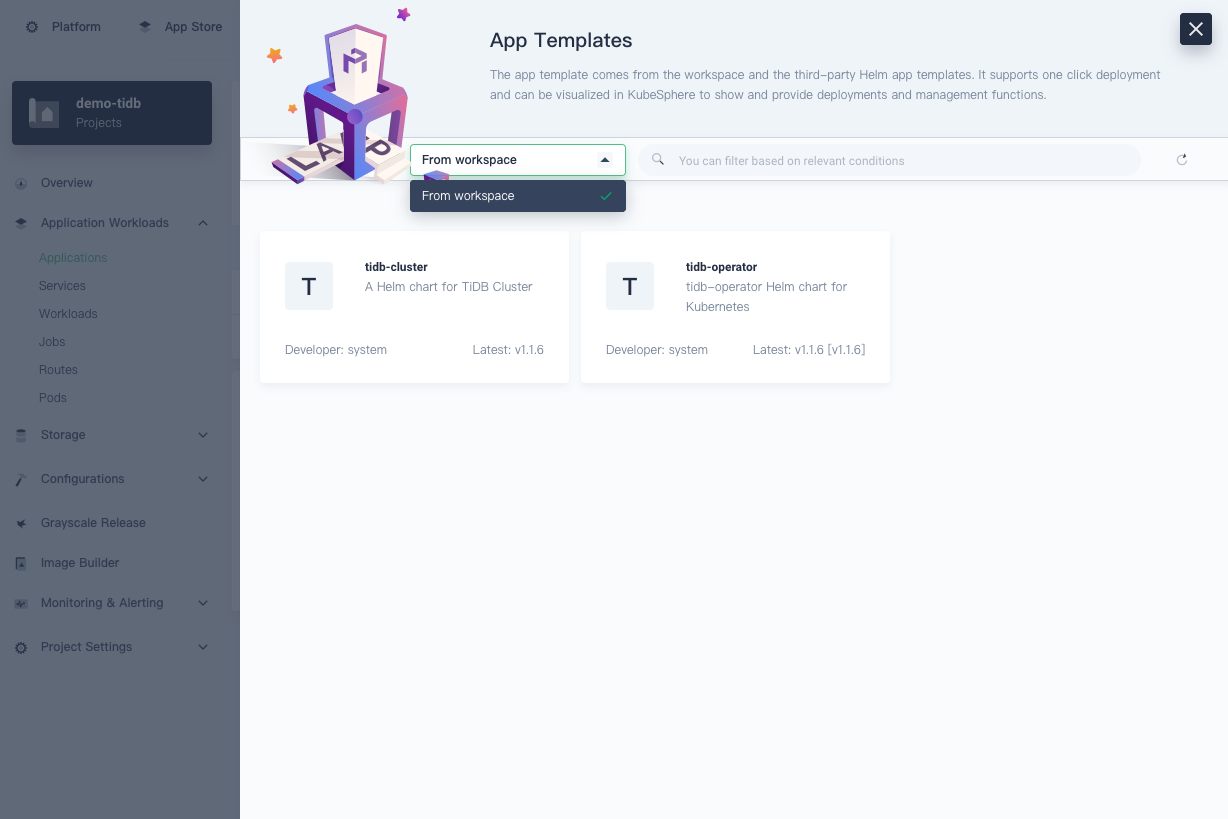

- Deploy TiDB Operator and the TiDB cluster. In the drop-down list, select From workspace and click tidb-cluster and tidb-operator respectively to deploy them. For more information about how to configure them, see my last post.

All Helm charts uploaded individually as app templates appear on the From workspace page. If you add an app repository to KubeSphere to provide app templates, those templates appear under the other repositories in the drop-down list.
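
Because a KubeSphere project is a Kubernetes namespace, you can also check the result from the command line if you have kubectl access to the cluster. This is a rough sketch only: the project name tidb-demo is hypothetical, and the DRY_RUN switch is an assumption of this example that makes it print the commands instead of running them unless you set DRY_RUN=0.

```shell
#!/usr/bin/env bash
# Sketch: list the workloads in the project after deploying from app templates.
# PROJECT (tidb-demo) is a hypothetical project name; replace it with your own.
set -euo pipefail

PROJECT="${PROJECT:-tidb-demo}"
DRY_RUN="${DRY_RUN:-1}"   # 1 = only print the commands; 0 = run them

# Print a command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Show the pods and services in the given namespace.
check_project() {
  local ns="$1"
  run kubectl get pods --namespace "$ns"
  run kubectl get services --namespace "$ns"
}

check_project "$PROJECT"
```

Once both apps are deployed, the pod list should include the workloads created by tidb-operator and tidb-cluster.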

Release TiDB to the App Store

App templates enable you to deploy and manage apps in a visual way. Internally, they play an important role as shared resources. Enterprises create these resources—which include databases, middleware, and operating systems—for the coordination and cooperation within teams.

You can release apps you have uploaded to KubeSphere to the public repository, also known as the App Store. In this way, all tenants on the platform can deploy these apps if they have the necessary permissions, regardless of the workspace they belong to.

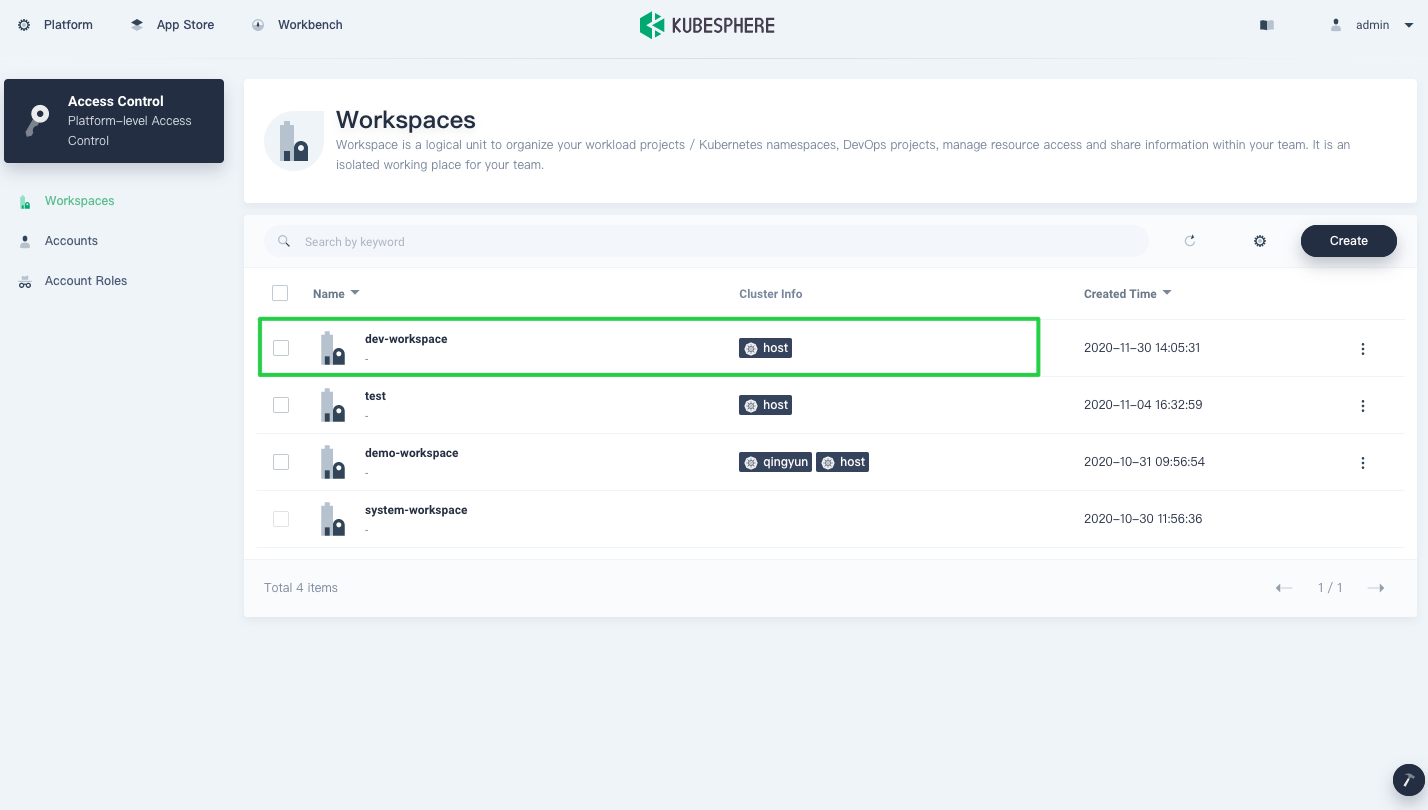

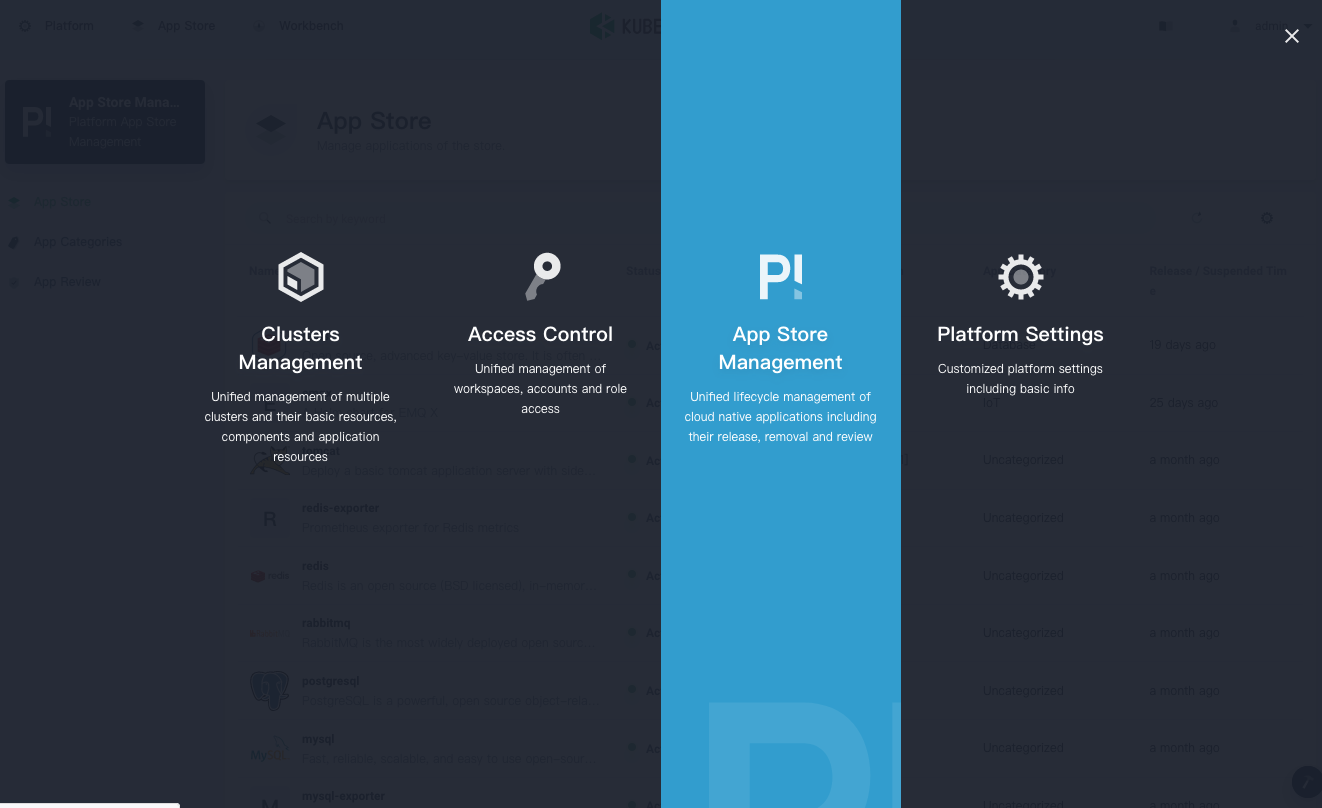

- In the top left corner, click Platform and select Access Control.

- On the Workspaces page, click the workspace where you uploaded the Helm charts.

- From the navigation bar, click App Templates to see the uploaded apps. To release an app to the App Store, click it; in this example, click tidb-operator.

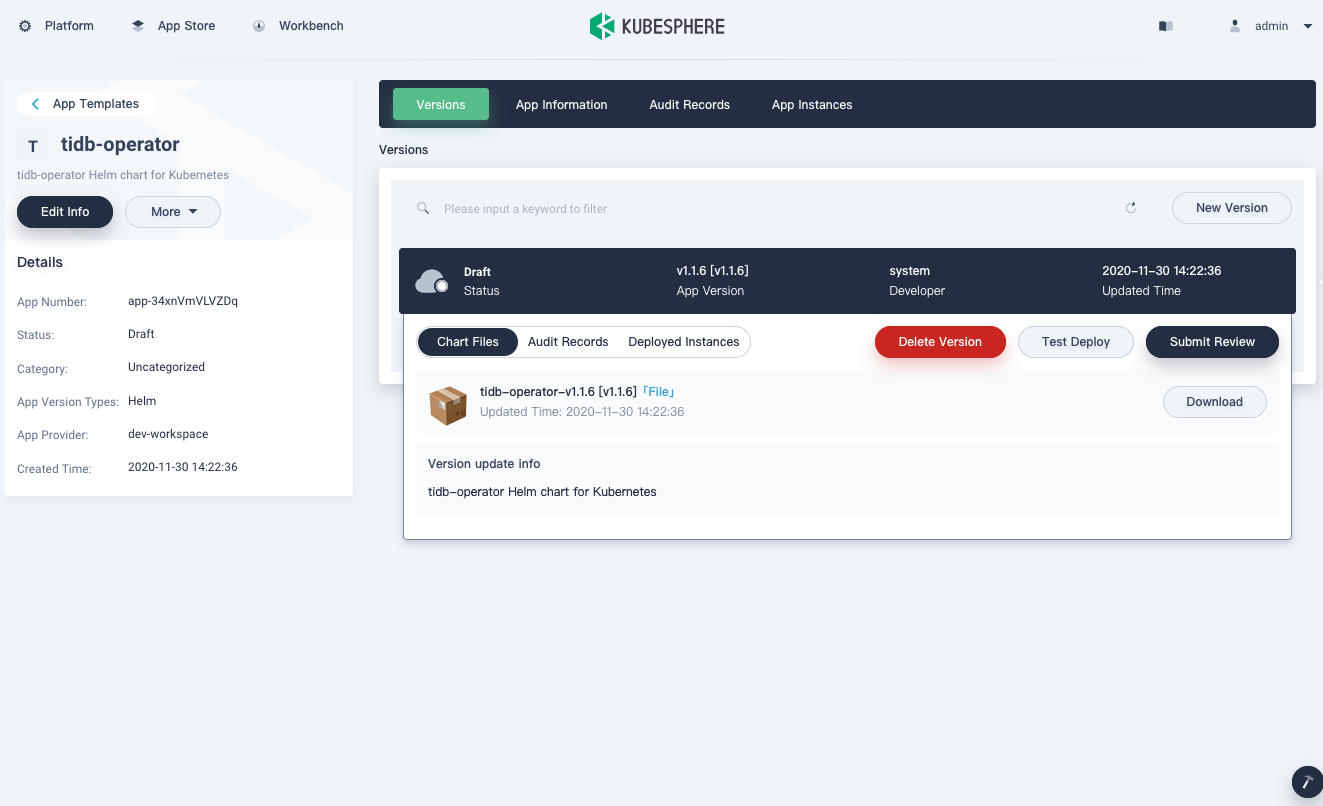

- On the Versions page, click the version number to expand the menu and click Submit Review.

KubeSphere allows you to manage an app across its entire lifecycle, including deleting a version, testing the deployment, and submitting the app for review. For an enterprise, this is very useful when different tenants need to be isolated from each other and are each responsible for only their own part of the lifecycle as they manage an app version.

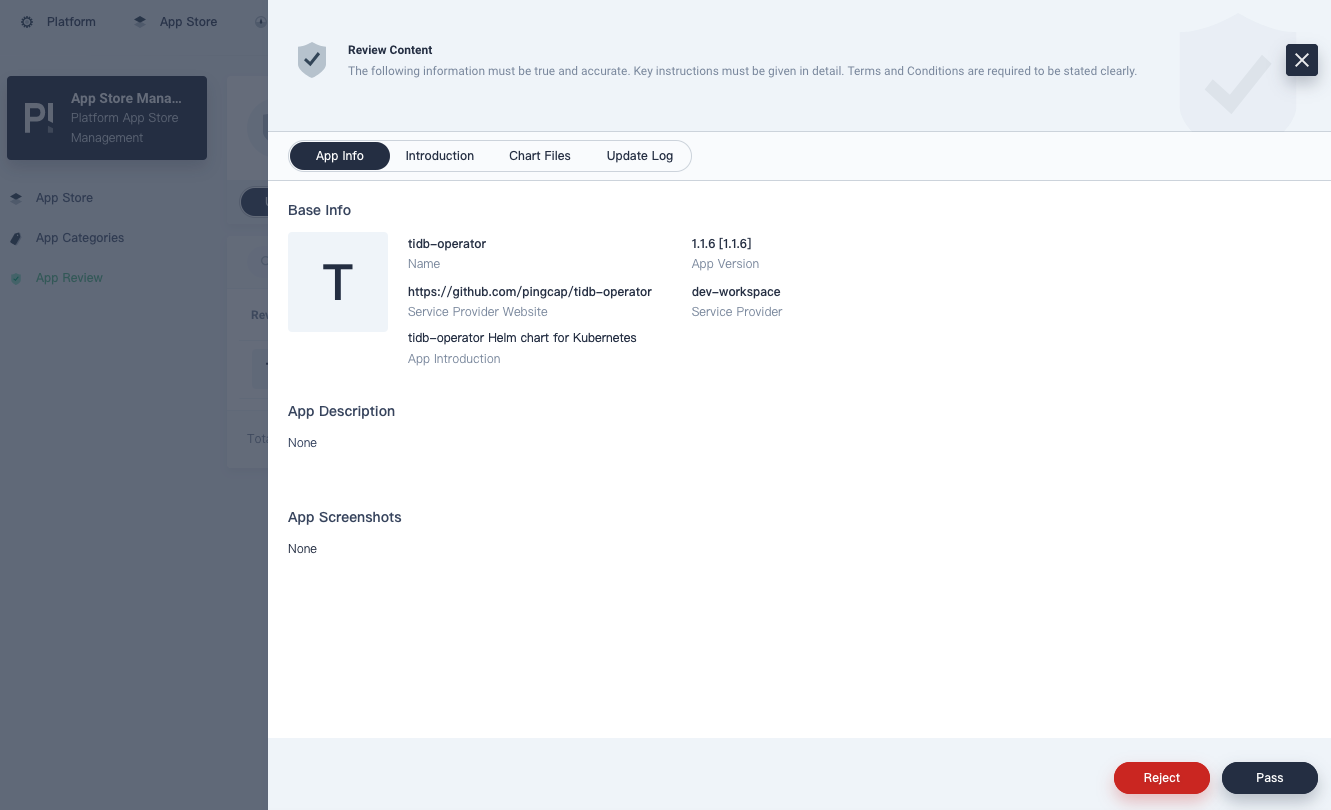

- Approve the app submitted for review. In the top left corner, click Platform and select App Store Management.

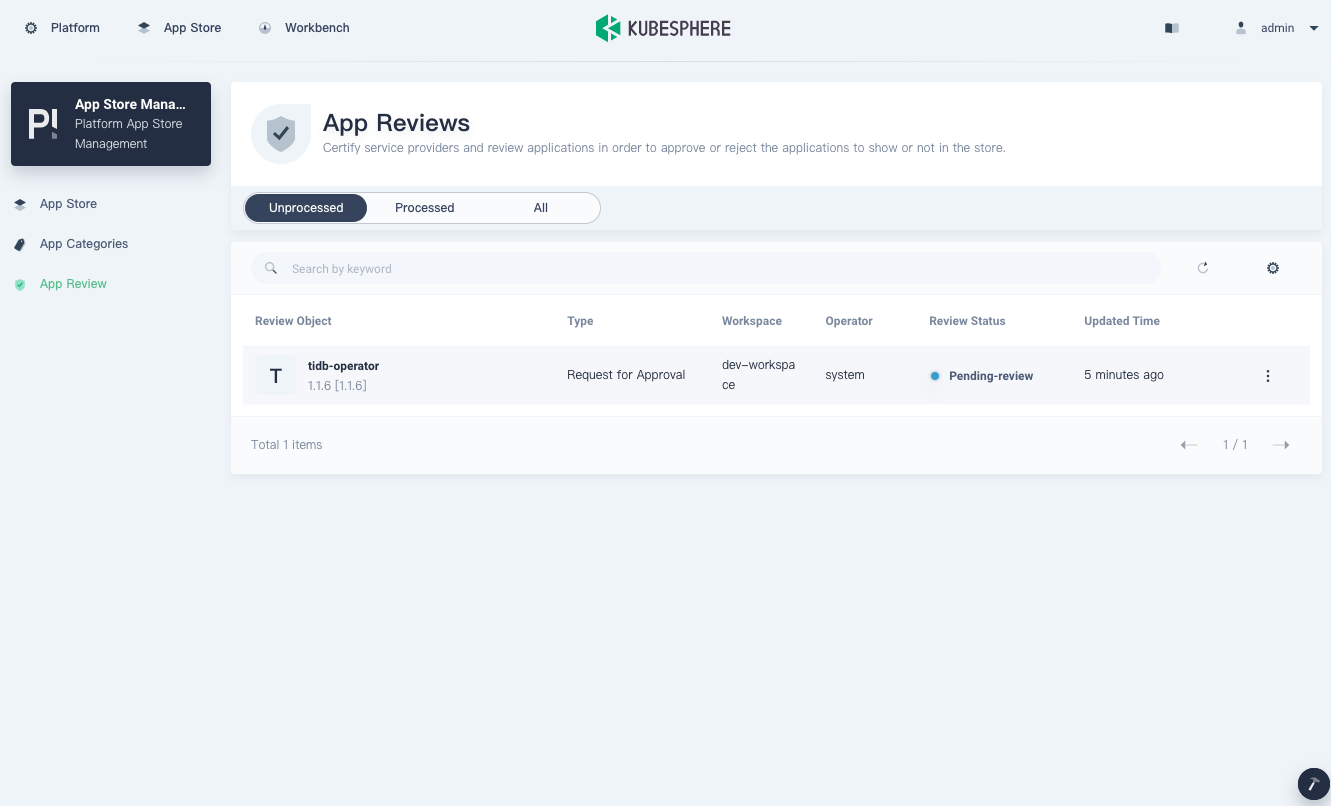

- On the App Reviews page, click the app you just submitted.

- On the App Info page that appears, review the app information and chart files. To approve the app, click Pass.

- After the app is approved, you can release it to the App Store. In the top left corner, click Platform and select Access Control.

- Go back to your workspace. From the navigation bar, select App Templates and click tidb-operator.

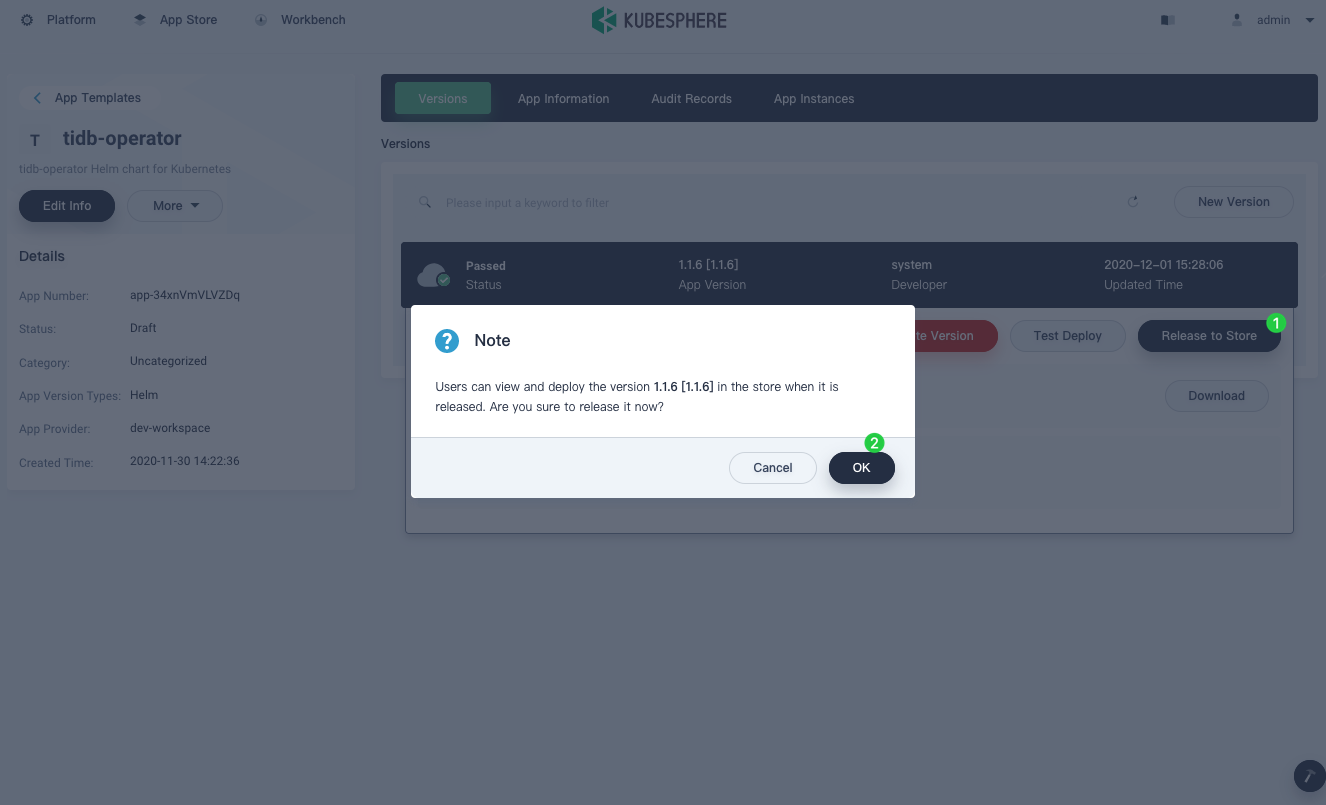

- On the Versions page, click the version number again, and you can see that the status has reached Passed. The Submit Review button has changed to Release to Store.

- Click Release to Store, and in the confirmation box, click OK.

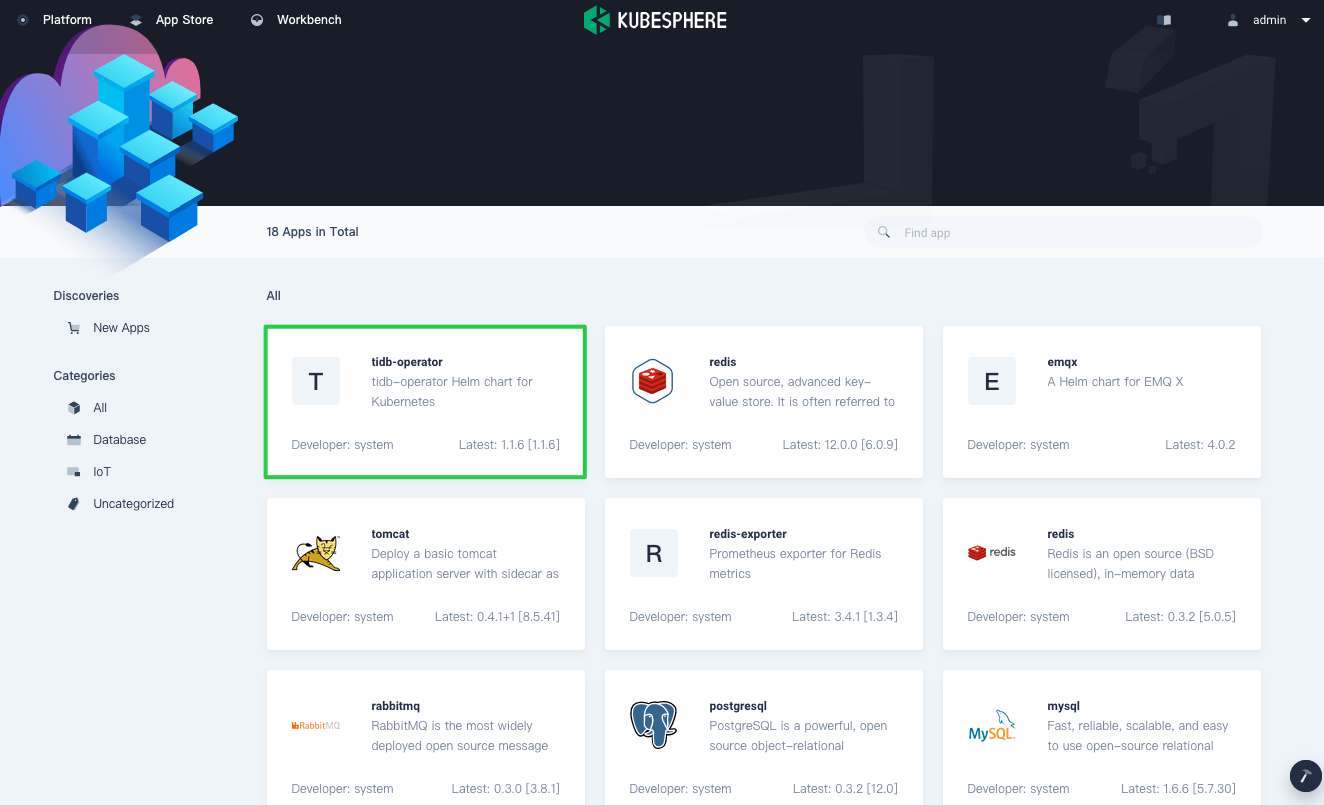

- To view the released app, click App Store in the top left corner, and you can see it in the App Store. You can use the same steps to release tidb-cluster to the App Store.

For more information about how to deploy an app from the App Store, see the KubeSphere documentation. You can also see Application Lifecycle Management to learn more about how an app is managed across its entire lifecycle.

Summary

Both TiDB and KubeSphere are powerful tools for us as we deploy containerized applications and use the distributed database on the cloud. As a big fan of open source, I hope both groups can continue to deliver efficient and effective cloud-native tools for us in production.

If you have any questions, don’t hesitate to contact us on Slack or GitHub.
