Satya Nadella, the CEO of Microsoft, once said, “Every company is now a software company.” As a system architect, I could not agree more. Since the pandemic especially, more businesses and users have moved online, and all of their activity ultimately becomes additional workload for servers, applications, and systems.

Scalability becomes a challenge. When your server does not have enough resources to handle the increasing load on your application, what do you usually do? Buy more RAM, add CPU cores, or add disks? These solutions are known as vertical scaling, or scaling up. But what if you max out the resources of a single machine and the system still can’t handle the workload? One popular option is to add more machines, which is known as horizontal scaling, or scaling out.

Here’s a better option: a hybrid approach. When you design an application, you can use streaming, pipelining, and parallelization to maximize the utilization of resources on a single node. Then, you can add additional hardware resources to scale your system out or up.

Increasing throughput without additional hardware resources

To describe the performance of a system, we need to consider both the input and output.

Performance = workload / resource consumption

We have two major scenarios: one focuses on operations per second (OPS) and the other on throughput. These are the two most important ways to measure system performance. Under certain constraints or optimizations, OPS can be treated as equivalent to throughput. This article focuses on throughput, the more fundamental of the two.

For example, the main goal of your system might be higher queries per second (QPS). When the average data load of each transaction is about the same, as it is in many Online Transactional Processing (OLTP) systems and API-based services, QPS is effectively equivalent to throughput. For Online Analytical Processing (OLAP) or other systems designed to handle huge data loads, higher throughput is the focus. A growing number of systems also need to handle a mixed load, such as a Hybrid Transactional and Analytical Processing (HTAP) database that supports both high QPS and high throughput. Throughput can be used to measure the workload of those systems as well.

Fully consuming system resources with better scheduling

To better understand how resources can be used effectively—or not—let’s look at the cooking-themed video game “Overcooked.” The goal is to serve your customers. You take their order, cook the food, and serve it. During cooking, you may need to chop ingredients, combine them, and cook them in a pot. Let’s look at this process more closely and see how things can quickly back up.

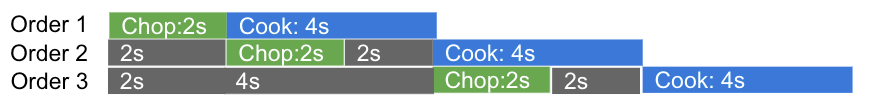

Let’s say chopping takes two seconds, and cooking takes four seconds. If we take one order at a time, each order will take six seconds. If three customers come in at the same time, the first customer will wait for six seconds, the second customer for 12 seconds, and the third 18 seconds. The longer your customers wait, the more likely they will lose patience and leave. If you don’t have enough paying customers, you’ll go out of business.

So, how do you speed things up and win the game? We process multiple orders at the same time: while Order 1 is on the cooktop, we chop the ingredients for Order 2. When we put Order 2 on the cooktop, we start chopping Order 3. With this new scheduling strategy, Customer 1 still waits for six seconds. Order 2 goes on the cooktop as soon as Order 1 comes off and is served four seconds later, and Order 3 follows four seconds after that. Customer 2 waits 10 seconds instead of 12, and Customer 3 waits 14 seconds instead of 18.

Process multiple orders at the same time

Since the cooktop stage takes the longest time, it determines how long it takes to complete each order. This is our “bottleneck resource.” We can see from the figure above that after chopping, Order 2 and Order 3 have to wait for two seconds before they can move on to the cooking stage. We’ll only be able to process the maximum number of orders if we keep the cooktop in constant use—no downtime.
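The two scheduling strategies can be compared with a small simulation. This is a minimal sketch, assuming one chopping board and one cooktop; the function name `completion_times` and its parameters are invented for illustration.

```python
def completion_times(n_orders, chop_s, cook_s, pipelined):
    """Return the time (in seconds) at which each order is served."""
    finished = []
    board_free = 0  # when the chopping board is next available
    stove_free = 0  # when the cooktop is next available
    for _ in range(n_orders):
        if pipelined:
            # Start chopping as soon as the board is free.
            chop_start = board_free
        else:
            # One order at a time: wait until the previous order is served.
            chop_start = max(board_free, stove_free)
        chop_done = chop_start + chop_s
        board_free = chop_done
        # Cooking needs both the chopped ingredients and a free cooktop.
        cook_start = max(chop_done, stove_free)
        stove_free = cook_start + cook_s
        finished.append(stove_free)
    return finished


print(completion_times(3, 2, 4, pipelined=False))  # [6, 12, 18]
print(completion_times(3, 2, 4, pipelined=True))   # [6, 10, 14]
```

In the pipelined case, every order after the first is served exactly one cooking time (four seconds) after the previous one, which is what it means for the cooktop to be the bottleneck.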

Scheduling on a computer is similar. The code to be executed is the workload, like the orders in the cooking game. System resources are like the chopping board and the cooktop. In a perfect world, all the resources are fully utilized the entire time the application runs, as shown in the chart below.

Ideally, all the resources are fully utilized

We can achieve this in the game if we upgrade the cooktop so that cooking a meal takes only two seconds, the same as chopping. In that case, neither the chopping board nor the cooktop has any idle time.

To shorten the cooking time, we can upgrade the cooktop

However, this kind of efficiency is rare. A more realistic goal is to keep the bottleneck resource (the CPU, in this example) at 100% utilization while the application is running. It is fine if the other resources are not fully used.

Fully utilize the bottleneck resource
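As a rough illustration of why only the bottleneck can be kept fully busy, the sketch below estimates how busy each stage is once a pipeline is full. It assumes every item passes through every stage once and that each stage has a fixed duration; `steady_state_utilization` is a hypothetical helper, not part of any library.

```python
def steady_state_utilization(stage_seconds):
    """Map each stage to the fraction of time it is busy once the pipeline is full."""
    cycle = max(stage_seconds.values())  # the slowest stage sets the pace
    return {name: seconds / cycle for name, seconds in stage_seconds.items()}


# Original kitchen: the cooktop is the bottleneck, the chopping board idles half the time.
print(steady_state_utilization({"chopping": 2, "cooktop": 4}))
# {'chopping': 0.5, 'cooktop': 1.0}

# Upgraded cooktop: both stages take two seconds, so neither idles.
print(steady_state_utilization({"chopping": 2, "cooktop": 2}))
# {'chopping': 1.0, 'cooktop': 1.0}
```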

In contrast, poorly designed applications do not use the bottleneck resource at 100% all the time. In fact, they may never use it that much.

Bad apps never fully use the bottleneck resource

To summarize, we evaluate the performance of a system by the throughput it can achieve with fixed system resources. A good application design enables higher throughput with fixed system resources by fully using the bottleneck resource.

Next, we will take a look at how streaming, pipelining, and parallelization can help to achieve the resource utilization goal.

Achieving maximum resource utilization

Breaking down a large task with streaming

“Streaming” can mean different things in different contexts. For example, “event streaming” is a set of events that occur one after another like a stream. In the context of this article, streaming means breaking a big task into smaller chunks and processing them in sequence. For example, to download a file from a web browser, we break the file into smaller data blocks, download one data block, write it to our local disk system, and repeat the process until the whole file is downloaded.
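The download example can be sketched in a few lines. This is an illustration only: in-memory `io.BytesIO` buffers stand in for the remote file and the local disk, and the function name and the 64 KiB block size are arbitrary choices.

```python
import io


def copy_in_chunks(source, sink, chunk_size=64 * 1024):
    """Copy `source` to `sink` one block at a time; return the bytes copied."""
    copied = 0
    while True:
        block = source.read(chunk_size)  # fetch one data block
        if not block:                    # empty read means end of stream
            break
        sink.write(block)                # persist it before fetching the next
        copied += len(block)
    return copied


remote = io.BytesIO(b"x" * 200_000)  # stand-in for the remote file
local = io.BytesIO()                 # stand-in for the local disk file
total = copy_in_chunks(remote, local)
print(total)  # 200000
```

At no point does the loop hold more than one block in memory, regardless of how large the file is.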

Streaming has several benefits. Before streaming, one large task could use all resources during a period and block other smaller tasks from using resources. It is like waiting in line at the Department of Motor Vehicles (DMV). Even though I just needed to renew my driver’s license with my pre-filled form, which took less than 5 minutes, I had to wait 20 minutes while the customer ahead of me finished his application.

Streaming can reduce peak resource utilization—whether you’re downloading a file or waiting in line at the DMV. What’s more, streaming reduces the cost of failed tasks. For example, if your download fails in the middle, it doesn’t have to start over. It just picks up from where it failed and continues with the remaining data blocks.
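The resume behavior can be sketched as follows. This is a simplified model, not a real download client: `read_range` is a hypothetical callback that returns the requested byte range, and the failure is simulated.

```python
import io


def download(read_range, total_size, sink, chunk_size=4):
    """Fetch blocks until `sink` holds `total_size` bytes.

    Resumes from however many bytes `sink` already contains.
    """
    offset = sink.seek(0, io.SEEK_END)  # bytes already saved
    while offset < total_size:
        block = read_range(offset, min(chunk_size, total_size - offset))
        if not block:
            raise EOFError("source ended early")
        sink.write(block)
        offset += len(block)
    return offset


payload = b"0123456789abcdef"
calls = {"n": 0}


def flaky_read(offset, length):
    """Simulated source that drops the connection on its third call."""
    calls["n"] += 1
    if calls["n"] == 3:
        raise ConnectionError("simulated network drop")
    return payload[offset:offset + length]


local = io.BytesIO()
try:
    download(flaky_read, len(payload), local)
except ConnectionError:
    pass  # the first two blocks are already saved
saved_before_retry = len(local.getvalue())
download(flaky_read, len(payload), local)  # continues from the saved offset
print(saved_before_retry, local.getvalue() == payload)  # 8 True
```

Only the block that was in flight when the failure happened has to be fetched again.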

Therefore, breaking down one big task into smaller chunks enables scheduling and opens the door for multiple ways of optimizing resource utilization. It’s fundamental for our next step: pipelining.

Folding the processing time with pipelining

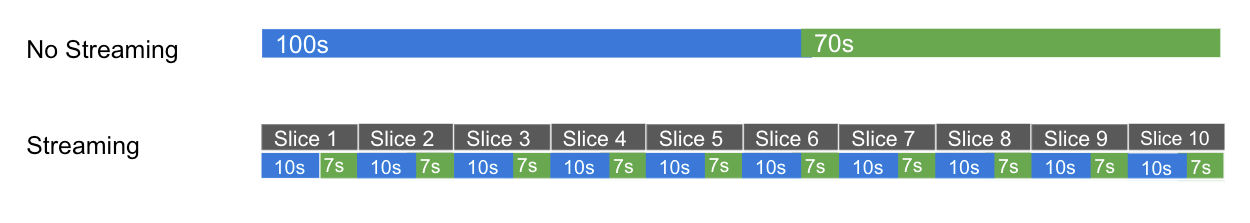

Assume that an application is running without a streaming design, and it takes two steps. Step 1 is encoding. It consumes CPU resources and takes 100 s. Step 2 is writing to disk. It consumes I/O bandwidth and takes 70 s. Overall, it takes 100 s + 70 s = 170 s to run this application.

With streaming, we break this one task into ten atomic slices. Each slice follows the same two steps but takes only 1/10 of the time. The overall task still takes the same amount of time: (10 s + 7 s) * 10 = 170 s. But this approach reduces memory usage, since we never have to hold the whole data set in memory before writing it to disk. This approach also reduces failure cost. (We ignore the transaction breakdown overhead here; we will discuss this in the implementation section.)

The time consumption of a task with/without streaming

If we add pipelining to this picture, Slice 2 can start using the CPU as soon as Slice 1 finishes with it. Slice 2 doesn’t have to wait for Slice 1 to finish its I/O. When Slice 2 finishes using the CPU, Slice 3 can start, and so on. Now the overall processing time is 10 s * 10 + 7 s = 107 s. With pipelining, we reduced our task time by 63 s, more than one-third of the original time!

With streaming and pipelining, the time consumption can be reduced
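The arithmetic for both schedules can be checked with a short calculation. This sketch assumes one CPU and one I/O channel, equal-sized slices, and no overhead for splitting the task; the function names are invented for illustration.

```python
def sequential_time(n_slices, cpu_s, io_s):
    """Streaming without pipelining: each slice finishes both steps before the next starts."""
    return n_slices * (cpu_s + io_s)


def pipelined_time(n_slices, cpu_s, io_s):
    """Streaming with pipelining: the CPU starts the next slice while I/O is still busy."""
    cpu_free = 0  # when the CPU finishes its current slice
    io_free = 0   # when the disk finishes its current slice
    for _ in range(n_slices):
        cpu_free += cpu_s
        # A slice can be written once it is encoded and the disk is free.
        io_free = max(cpu_free, io_free) + io_s
    return io_free


print(sequential_time(10, 10, 7))  # 170
print(pipelined_time(10, 10, 7))   # 107
```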

The benefit of pipelining is obvious. Overlapping the steps folds the timeline and significantly reduces the processing time. The application can process more work in the same time period, which means higher throughput.

The expected processing time should drop to roughly the duration of the most time-consuming step in the original process. In the example above, the encoding step alone takes 100 s without streaming and pipelining, and the new flow, which uses those techniques, takes 107 s in total. The two are about the same.
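To see why the pipelined time approaches the longest step, the example can be generalized. The symbols here are introduced only for this sketch: $n$ is the number of equal slices, $C$ and $W$ are the total CPU and I/O times of the original task, and splitting overhead is ignored.

```latex
\begin{aligned}
T_{\text{streaming}} &= n\left(\frac{C}{n} + \frac{W}{n}\right) = C + W \\
T_{\text{pipelined}} &= \left(\frac{C}{n} + \frac{W}{n}\right) + (n - 1)\,\max\!\left(\frac{C}{n}, \frac{W}{n}\right)
                      = \max(C, W) + \frac{\min(C, W)}{n}
\end{aligned}
```

With $C = 100$ s, $W = 70$ s, and $n = 10$, this gives $100 + 70/10 = 107$ s. As $n$ grows, the second term shrinks and the total approaches $\max(C, W) = 100$ s, although in practice the per-slice overhead that this sketch ignores limits how small the slices can usefully be.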

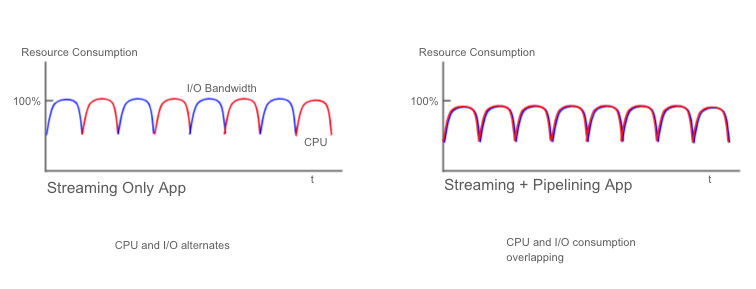

Also, we are maximizing the utilization of the bottleneck resource—CPU. The CPU does not have to wait for the I/O step to finish before it starts processing the next slice. Idle time is wasted time. So, we are one step closer to our performance goal as shown below.

Ideally, the bottleneck resource should be fully utilized

The two diagrams below show how our resource consumption has changed. We’ve gone from “CPU and I/O alternate” to “CPU and I/O consumption overlaps.”

Pipelining applies to continuously running applications as well. Folding the processing time in this way can flatten the consumption curve for a single resource. From here, we can apply the parallelization strategy and gain even more efficiency.
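One common way to build such a pipeline is to run each step on its own thread and connect the steps with a bounded queue. The sketch below is an illustration under several assumptions: `zlib.compress` stands in for the encoding step, an in-memory buffer stands in for the disk, and the length-prefixed record format and all function names are invented for the example.

```python
import io
import queue
import threading
import zlib


def run_pipeline(slices, sink, max_pending=2):
    """Encode slices on this thread while a writer thread drains them to `sink`."""
    pending = queue.Queue(maxsize=max_pending)  # bounded: limits data held in memory
    done = object()                             # sentinel marking the end of input

    def writer():
        while True:
            item = pending.get()
            if item is done:
                break
            # A length prefix lets a reader split the records again.
            sink.write(len(item).to_bytes(4, "big"))
            sink.write(item)

    thread = threading.Thread(target=writer)
    thread.start()
    for piece in slices:
        pending.put(zlib.compress(piece))  # CPU step; blocks if the writer falls behind
    pending.put(done)
    thread.join()


def read_back(buffer):
    """Decode the length-prefixed records written by run_pipeline."""
    view = io.BytesIO(buffer)
    records = []
    while True:
        header = view.read(4)
        if not header:
            break
        size = int.from_bytes(header, "big")
        records.append(zlib.decompress(view.read(size)))
    return records


slices = [bytes([i]) * 1000 for i in range(10)]
disk = io.BytesIO()
run_pipeline(slices, disk)
restored = read_back(disk.getvalue())
print(restored == slices)  # True
```

The bounded queue keeps the encoder from running far ahead of the writer, so memory use stays proportional to the slice size. In CPython, how much the two steps actually overlap depends on the work releasing the global interpreter lock, which blocking I/O generally does. A production version would also need to handle errors raised inside the writer thread.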

Maximizing resource consumption with parallelization

Streaming and pipelining break down tasks into smaller actions, and the consumption curve for one resource becomes close to flat, although it may not reach 100%. We can apply parallelization to fully utilize the bottleneck resource and achieve high performance, for example, 100% CPU utilization.

Going back to our cooking example, streaming is like breaking down cooking a dish into cooking the meat, cooking the noodles, and cooking the mushrooms. Pipelining is like breaking down the cooking stage into chopping the meat and cooking the meat, and chopping the mushrooms while the meat is cooking. And what is parallelization? You are right: it means we have different players working together, so we can cook multiple orders at the same time. With a good scheduling strategy, the players can work together to keep the bottleneck resource, the cooktop, running non-stop.

In daily life, our computers use parallelization all the time. Loading a web page is one example. Right after you enter the address, your browser loads the HTML page. At the same time, it issues multiple requests in parallel to load the pictures on that page.

Parallelization is more effective when we apply it to threads that already have a flat resource consumption curve. It can be applied to a simple process that contains only one step and is smoothly consuming the resource by default or to complex processes that use streaming and pipelining. (The next article in this series discusses how to implement different parallelization strategies.)
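As a small illustration of applying parallelization to a simple one-step process, the sketch below encodes several slices on a pool of worker threads. The choice of `zlib.compress` as the encoding step, the worker count, and the function name are assumptions made for the example.

```python
from concurrent.futures import ThreadPoolExecutor
import zlib


def encode_all(slices, workers=4):
    """Encode slices on several worker threads; results keep the input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(zlib.compress, slices))


slices = [bytes([i]) * 100_000 for i in range(8)]
encoded = encode_all(slices)
print(len(encoded))  # 8
```

Each worker runs the same single step on its own slice, so every worker has a similar, smooth consumption pattern. For CPU-bound work written in pure Python, a process pool (`concurrent.futures.ProcessPoolExecutor`) is usually the better fit, because threads in CPython share one global interpreter lock.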

We have talked a lot about what we should do. But it is easy to ignore what we should NOT do. We shouldn’t apply parallelization to processes that do not smoothly consume resources or to processes where we can’t control which resources are being consumed. In those cases, we are giving the operating system the power to decide resource allocation.

Now you understand the basics of streaming, pipelining, and parallelization. Join us for Part II of this series, where we discuss how to implement these methods. We’ll also consider strategies on which combination of methods you can use to meet your specific needs.

If you want to learn more about app development, feel free to contact us through Twitter or our Slack channel. You can also check our website for more solutions on how to supercharge your data-intensive applications.

