One of the hallmarks of Web3 is the effort to monetize data. For many companies, data has become one of their most valuable assets. However, to get the most value from that data, it must be stored and analyzed more efficiently. Customers are creating more complex queries and demanding faster results, traditional relational databases can barely keep up. Take MySQL as an example. Its performance usually begins to drop when table data reaches tens of millions of rows. Sharding is a common solution, but it’s a complicated task. Traditional Online Analytical Processing (OLAP) databases can’t meet such demands either; they can only handle very low query concurrency.

This post introduces an efficient and simpler solution to this problem: TiDB + Amazon Web Services (AWS). TiDB is an open source, Hybrid Transactional and Analytical Processing (HTAP) database that’s compatible with MySQL and horizontally scalable. AWS is a popular cloud service provider. This solution has been proven efficient in the production environment by a global financial services company. They leverage TiDB to build their data-intensive financial dashboard, and store, process, and analyze their ever-increasing data while limiting the latency within milliseconds.

In this post, we’ll also use a blockchain analytics application as an example, to elaborate on how TiDB on AWS supports it, process and analyze data in a single table with over 100 million rows, and return the query results within seconds.

Requirements for an analytics application for blockchain data

In Web 3, distributed blockchain technologies are one of the most prominent “figures,” and blockchain data is growing exponentially. For example, some of the archived nodes of the popular blockchain technology Ethereum store more than 10 terabytes of data with over 1 billion transactions, and Ethereum processes more than one million new transactions each day. What’s even more challenging is that many businesses must process and analyze these huge amounts of data in real time.

To do this, they often build centralized analytics applications to store and aggregate the data and adopt databases or big data technologies to provide complete analytics. In a blockchain analytics application, data is the most important thing. The application must:

- Deal with huge amounts of data. The blockchain data contains all the data from each public chain. This can be terabytes. Some large chains have tens of billions of rows of data.

- Respond to queries in real time. The latest transactions and data on each public chain should be synchronized in real time to give users more accurate analytical results.

- Have high performance. The application must process a large amount of queries at the same time, even when new data is being written and synchronized.

- Have low Latency. To provide a good user experience, all queries and page loading should be finished within one second.

- Scale out horizontally. When the data volume or queries grow rapidly, the application system should scale out horizontally with no downtime and with minimal business impact.

- Be easy to operate and maintain. To reduce costs, system operation and maintenance should be easy and simple.

- Have a simple technology stack. A simple technology stack makes daily work and product development easier. This is especially important if you have a small development team.

- Have a smart and simplified architecture. The system should have a cloud-native architecture that leverages hosted services such as AWS. This provides flexible scalability.

Using TiDB as the database solution

The database is the core of a blockchain data analytics application. In the following diagram, TiDB supports an analytics application for Ethereum to store and analyze the blockchain data.

TiDB supporting an application for Ethereum

To make the application easy to manage and scale, it is recommended to deploy TiDB on the cloud. Here, we deploy TiDB on AWS. The diagram below shows the architecture of this analytics application where TiDB is deployed on Amazon Elastic Kubernetes Service (EKS).

TiDB on Amazon EKS architecture

Using TiDB on EKS to support this application has the following advantages:

- TiDB stores the data and also handles queries and analytics.

- Computing and storage are separated.

- TiDB is fully MySQL compatible.

- TiDB on EKS makes the application easy to scale.

- TiDB performance is monitored by two popular open source monitoring systems in real time: Prometheus and Grafana.

We also recommend that you deploy TiDB Operator, an automatic operation system for TiDB clusters in Kubernetes, on Amazon EKS as well. This way, you can fully adopt the Kubernetes methods to deploy and manage TiDB clusters.

For example, to scale in or scale out TiDB clusters, just change the value of the number of replicas in the YAML file. This is shown below.

Configuring TiDB clusters in the YAML file

NOTE: Instead of deploying and configuring TiDB manually on AWS EKS, you can also try TiDB Serverless, a fully managed Database-as-a-Service (DBaaS) of TiDB. TiDB Serverless provides the same robust performance as TiDB with added flexibility and ease of scaling, making it a compelling choice for dynamic workloads and fluctuating data demands.

Import data into TiDB

Preparation

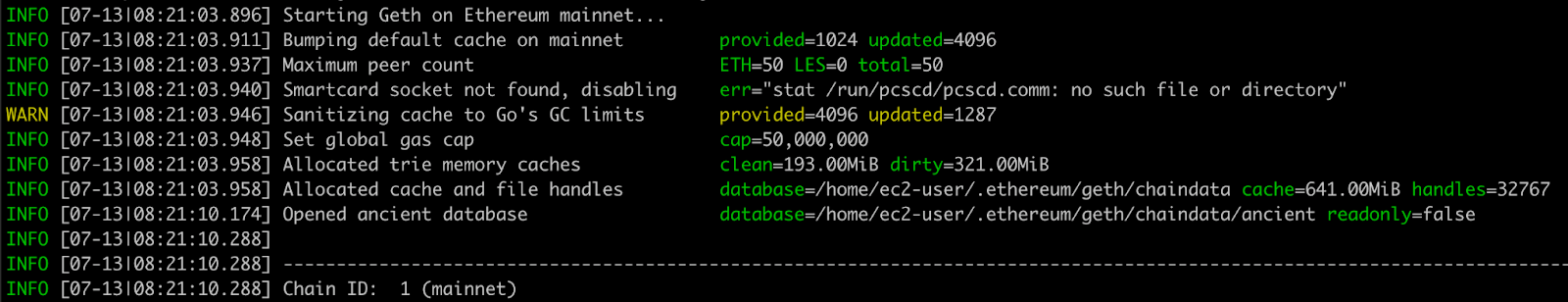

- You need to set up the Ethereum nodes.

- Download and run Geth on Amazon EC2 to synchronize the blockchain data.

Note: You can also use other tools such as Parity to set up Ethereum nodes.> nohup geth [ --syncmode fast | full| light ] &

- On Amazon EKS, deploy TiDB. For a detailed guide, refer to the documentation Deploy TiDB on AWS EKS.

- Connect the Ethereum nodes with TiDB. TiDB is fully MySQL compatible, so you can use any MySQL client to connect to it.

- On TiDB, create tables to store the blockchain data. Here, we create two sample tables:

blockchain.eth_blockandblockchain.eth_transactions. For more information on table structures, refer to the schema description documentation.

CREATE DATABASE blockchain;

CREATE TABLE `blockchain.eth_block` (

`hash` char(66) NOT NULL PRIMARY KEY,

`number` bigint,

`parent_hash` char(66),

`nonce` bigint,

`gas_limit` bigint,

`gas_used` bigint,

`transaction_count` bigint,

`extra_data` text,

`logs` text,

……

)

CREATE TABLE `blockchain.eth_transactions` (

`hash` char(66),

`nonce` bigint,

`block_hash` char(66),

`block_number` bigint,

`transaction_index` bigint,

`from_address` char(42),

`to_address` char(42),

`value` double,

`gas` bigint,

`gas_price` bigint,

`input` mediumtext,

……

)

Importing data to TiDB

To import the data from the Ethereum nodes to TiDB, we can use Ethereum ETL, an open-source extract, transform, load (ETL) tool. We recommend that you download its latest version.

Run the following commands to import data from Ethereum nodes to TiDB.

> ethereumetl stream -e block,transaction --start-block <start_position> --end-block <end_position> --provider-uri file://<your_path>/geth.ipc --output=mysql+pymysql://<username>:<password>@<tidb_endpoint>:<port>/<dbname>[?<options>]

Importing data to TiDB

When an Ethereuma node starts, it creates a geth.ipc file, which allows other services to attach to that server and read the data. The commands above read the blockchain data and transactions from the geth.ipc files on each Ethereum node, and write them to the blockchain.eth_block table and blockchain.eth_transactions table we created earlier.

Verification

After the data importing process is done, you can write SQL statements via a MySQL client to verify whether the data is imported to TiDB correctly. We show three examples below.

- Verify whether the number of rows written to TiDB is correct:

SELECT count(*) FROM blockchain.eth_blocks;

SELECT count(*) FROM blockchain eth_transaction;- Verify that the transaction records for a specific address are correct:

SELECT FROM from_address, to_address, time_at from blockchain.eth_transaction where from_addr = '0x1XXXXXXXXXXX48';- Verify that the number of Ethereum transactions is correct:

SELECT sum(value) AS TOTAL FROM transactions;Benchmarking

To implement this analytics application, you need to store massive amounts of data in a single table. This requires high database performance. To monitor TiDB’s performance, we’ll simulate some stress tests.

There are several ways to benchmark TiDB. Here, we use Sysbench, a popular open-source database benchmarking tool. We’ll test TiDB performance when it processes 50 tables, each with 100 million rows of data, and a total size of about one terabyte.

The tests use four Amazon EKS clusters with the following configurations:

- Standard-3: 3 TiDB clusters, 3 TiKV clusters, and 3 Placement Driver (PD) clusters

| Service type | EC2 type | Instances |

| PD | m5.xlarge (gp3 20GB 3000 IOPS 125MB/s) | 3 |

| TiKV | i3.4xlarge (2 x 1,900 GB NVMe SSD) | 3 |

| TiDB | c5.4xlarge (gp3 20GB 3000 IOPS 125MB/s) | 3 |

- Hybrid-6: 6 TiDB clusters, 6 TiKV clusters, and 3 PD clusters

| Service type | EC2 type | Instances |

| PD | m5.xlarge (gp3 20GB 3000 IOPS 125MB/s) | 3 |

| TiDB | i3.4xlarge (2 x 1900 GB NVMe SSD) | 6 |

| TiKV | i3.4xlarge (2 x 1,900 GB NVMe SSD) | 6 |

- Hybrid-12: 12 TiDB clusters, 12 TiKV clusters, and 3 PD clusters

| Service type | EC2 type | Instances |

| PD | m5.xlarge (gp3 20GB 3000 IOPS 125MB/s) | 3 |

| TiDB | i3.4xlarge (2 x 1900 GB NVMe SSD) | 12 |

| TiKV | i3.4xlarge (2 x 1,900 GB NVMe SSD) | 12 |

- Hybrid-18: 18 TiDB clusters, 18 TiKV clusters, and 3 PD clusters

| Service type | EC2 type | Instances |

| PD | m5.xlarge (gp3 20GB 3000 IOPS 125MB/s) | 3 |

| TiDB | i3.4xlarge (2 x 1900 GB NVMe SSD) | 18 |

| TiKV | i3.4xlarge (2 x 1,900 GB NVMe SSD) | 18 |

We test TiDB performance mainly in three scenarios: point select, read & write, and write only.

Point select

Run Sysbench:

> sysbench —config-file=config oltp_point_select —tables=50 — table-size=100000000 runSysbench results:

| point_select 50 tables (100m rows per table) 1TB data in total |

||||

| TiDB | Standard-3 | Hybrid-6 | Hybrid-12 | Hybrid-18 |

| Threads | 2500 | 3500 | 4000 | 10000 |

| Max QPS | 72,035 | 121,559 | 293,274 | 482,598 |

| 95% latency (ms) | 44.17~137.35 | 34.33~82.96 | 20.74~56.84 | 15~44.17 |

Read & write

Run Sysbench:

> sysbench —config-file=config oltp_read_write —tables=50 — table-size=100000000 runSysbench results:

| read_write 50 tables (100m rows per table) 1TB data in total |

||||

| TiDB | Standard-3 | Hybrid-6 | Hybrid-12 | Hybrid-18 |

| Threads | 2500 | 3500 | 4000 | 10000 |

| Max QPS | 61,048.60 | 55,872.14 | 112,274.7 | 170,125.06 |

| 95% latency (ms) | 475.79~1836.24 | 601.29~2120.76 | 314.45~1191.92 | 287.38~787.74 |

Write only

Run Sysbench:

> sysbench —config-file=config oltp_write_only —tables=50 — table-size=100000000 runSysbench results:

| write_only 50 tables (100m rows per table) 1TB data in total | ||||

| TiDB | Standard-3 | Hybrid-6 | Hybrid-12 | Hybrid-18 |

| Threads | 2500 | 3500 | 4000 | 10000 |

| Max QPS | 31,375.17 | 40,469.28 | 78,566.19 | 119,137.42 |

| 95% latency (ms) | 325.98~1089.3 | 292.6~1050.76 | 150.29~569.67 | 114.72~390.3 |

The test results show that as the number of threads grows, TiDB clusters scale out, the maximum QPS increases significantly, and the 95% latency is reduced.

Note:

- The stress tests don’t limit the usage of CPU resources, so latency may be relatively high compared to a production environment.

Conclusion

Sysbench results show that TiDB can handle both continuously increasing business traffic or sudden spikes while keeping the latency stable and low. A services provider has used this solution to build an advanced DeFi Dashboard that covers data on dozens of public chains. TiDB performs well. The volume of data a single TiDB cluster stores exceeds 100 terabytes, and is still growing. The average QPS is between 25,000 and 30,000, and the P99 latency is around 50 ms.

If you are building data-intensive applications that need to process and analyze large amounts of data without slowing down your business, consider a solution with TiDB deployed on Amazon EKS. It can easily scale in or out without impacting your business, and it can handle both OLTP and OLAP workloads. In addition, you can also try TiDB Serverless offers an additional option that brings the benefits of serverless architecture to the table. TiDB Serverless is a flexible way to test the power of TiDB yourself. It inherits all of TiDB’s core capabilities, including high performance, scalability, and reliability. It also takes advantage of serverless architecture to provide rapid adaptation to changing data demands. To learn more, sign up for free.

Keep reading:

How to Choose the Right Database for Your NFT Marketplace

Building a Web Application with Spring Boot and TiDB

Building an E-Commerce System with TiDB Cloud and Snowflake

Want to explore TiDB without installing any software? Go to TiDB Playground

Experience modern data infrastructure firsthand.

TiDB Cloud Dedicated

A fully-managed cloud DBaaS for predictable workloads

TiDB Cloud Serverless

A fully-managed cloud DBaaS for auto-scaling workloads