Author: Ron Xing (Customer Support Engineer at PingCAP)

Transcreator: Caitin Chen; Editor: Tom Dewan

Benchmark purpose

The following tests compare the performance of TiDB, a MySQL-compatible NewSQL database, running on an Arm-based Amazon Elastic Kubernetes Service (EKS) cluster and on an x86-based EKS cluster. The tests use an Online Transaction Processing (OLTP) workload, and the benchmarking tools are TPC-C and sysbench. For more information on what inspired this benchmark, see the AWS Startups Blog.

Benchmark environment

The test used two EKS clusters with the following instance types and topology.

Instance types

The following table summarizes all the EC2 instances we used in the EKS clusters as well as the benchmark node.

| Instance size (CPU architecture) | vCPUs | Memory (GiB) | Instance storage (GB) | Network bandwidth (Gbps) | EBS bandwidth (Mbps) |
|---|---|---|---|---|---|
| c6g.large (Arm) | 2 | 4 | EBS-only | Up to 10 | Up to 4,750 |
| c6g.2xlarge (Arm) | 8 | 16 | EBS-only | Up to 10 | Up to 4,750 |
| r6g.2xlarge (Arm) | 8 | 64 | EBS-only | Up to 10 | Up to 4,750 |
| c5.large (x86) | 2 | 4 | EBS-only | Up to 10 | Up to 4,750 |
| c5.2xlarge (x86) | 8 | 16 | EBS-only | Up to 10 | Up to 4,750 |
| r5.2xlarge (x86) | 8 | 64 | EBS-only | Up to 10 | Up to 4,750 |
| c5.4xlarge (x86) | 16 | 32 | EBS-only | 10 | 4,750 |

Storage types

The following table summarizes the disks we used for each component.

| Service type | Storage | Size (GB) | IOPS | Throughput (MiB/s) | Instances |
|---|---|---|---|---|---|
| TiKV | EBS gp3 | 1,000 | 16,000 | 600 | 3 |
| PD | EBS gp2 | 50 | 150 | 128 | 1 |
| TPC-C/sysbench | EBS gp3 | 1,500 | 16,000 | 1,000 | 1 |

Topology

We used one c5.4xlarge EC2 instance as a benchmark node where TPC-C and sysbench are deployed.

Each EKS cluster consists of seven worker nodes and one admin (control plane) node. The seven worker nodes serve as dedicated TiDB (three), TiKV (three), and PD (one) nodes.

For the role of each component and an architecture diagram, see the AWS Startups Blog.

| Cluster/processor | Service type | EC2 type | Instances |
|---|---|---|---|
| Cluster 1: Graviton2 Arm | TiDB | c6g.2xlarge | 3 |
| | TiKV | r6g.2xlarge | 3 |
| | PD | c6g.large | 1 |
| | Admin node | c6g.large | 1 |
| Cluster 2: Intel Xeon Platinum 8000 series | TiDB | c5.2xlarge | 3 |
| | TiKV | r5.2xlarge | 3 |
| | PD | c5.large | 1 |
| | Admin node | c5.large | 1 |

Software version

The TiDB cluster software versions and the sysbench tool version are listed below.

| Service type | Software version |
|---|---|
| TiDB | v4.0.10 |
| TiKV | v4.0.10 |
| PD | v4.0.10 |
| TPC-C (embedded with TiUP) | v1.0.8 |
| sysbench | v1.0.20 |

Cost

In the following cost examples:

- Cost calculations are based on the on-demand rate for instances in the US West (Oregon) and Asia Pacific (Singapore) regions in US dollars (USD) per month.

- Monthly calculations are based on 730 hours of usage per month.
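Applied to the EC2 tables below, these assumptions give: monthly cost = unit price (USD/hr) × 730 × number of instances. The following minimal Python sketch reproduces the US West (Oregon) EC2 totals from the unit prices listed in this post. The dictionary and function names are illustrative only, and the same arithmetic applies to the APAC prices.

```python
HOURS_PER_MONTH = 730  # billing assumption stated in this post

# US West (Oregon) on-demand unit prices (USD/hr) and instance counts,
# copied from the EC2 tables in this post.
ARM_US = {
    "TiDB (c6g.2xlarge)": (0.272, 3),
    "TiKV (r6g.2xlarge)": (0.4032, 3),
    "PD (c6g.large)": (0.068, 1),
    "Control plane (c6g.large)": (0.068, 1),
}
X86_US = {
    "TiDB (c5.2xlarge)": (0.34, 3),
    "TiKV (r5.2xlarge)": (0.504, 3),
    "PD (c5.large)": (0.085, 1),
    "Control plane (c5.large)": (0.085, 1),
}


def monthly_cost(unit_price: float, instances: int) -> float:
    """Monthly on-demand cost of `instances` nodes at `unit_price` USD/hr."""
    return unit_price * HOURS_PER_MONTH * instances


def fleet_monthly_cost(fleet: dict[str, tuple[float, int]]) -> float:
    """Total monthly EC2 cost of one cluster, rounded to cents."""
    return round(sum(monthly_cost(price, count) for price, count in fleet.values()), 2)


if __name__ == "__main__":
    for label, fleet in (("Arm", ARM_US), ("x86", X86_US)):
        print(f"{label}: {fleet_monthly_cost(fleet):,.2f} USD/month")
```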

Storage

In the following table, the total cost per month includes a daily snapshot.

| Volume type | Size (GB) | IOPS | Throughput (MiB/s) | Instances | Monthly cost (US) | Monthly cost (APAC) |
|---|---|---|---|---|---|---|
| gp3 | 1,000 | 16,000 | 600 | 3 | 648.27 | 746.58 |
| gp2 | 50 | 150 | 128 | 1 | 9.75 | 10.75 |

EC2

We used the following instances based on Graviton2 Arm processors. Unit prices are the on-demand rates in both the US region and the APAC region.

| Service type | EC2 type | Instances | US unit price (USD/hr) | US monthly cost | APAC unit price (USD/hr) | APAC monthly cost |
|---|---|---|---|---|---|---|
| TiDB | c6g.2xlarge | 3 | 0.272 | 595.68 | 0.3136 | 686.78 |
| TiKV | r6g.2xlarge | 3 | 0.4032 | 883.01 | 0.4864 | 1,065.22 |
| PD | c6g.large | 1 | 0.068 | 49.64 | 0.0784 | 57.23 |
| Control plane | c6g.large | 1 | 0.068 | 49.64 | 0.0784 | 57.23 |
| Total | | | | 1,577.97 | | 1,866.46 |

Here are the configurations and costs of our instances based on Intel Xeon Platinum 8000 series processors:

| Service type | EC2 type | Instances | US unit price (USD/hr) | US monthly cost | APAC unit price (USD/hr) | APAC monthly cost |
|---|---|---|---|---|---|---|
| TiDB | c5.2xlarge | 3 | 0.34 | 744.60 | 0.392 | 858.48 |
| TiKV | r5.2xlarge | 3 | 0.504 | 1,103.76 | 0.608 | 1,331.52 |
| PD | c5.large | 1 | 0.085 | 62.05 | 0.098 | 71.54 |
| Control plane | c5.large | 1 | 0.085 | 62.05 | 0.098 | 71.54 |
| Total | | | | 1,972.46 | | 2,333.08 |

Total cost

The following tables summarize the total costs per month in the US West (Oregon) and Asia Pacific (Singapore) regions. All costs are in US dollars per month.

AWS US West (Oregon)

| CPU type | EC2 cost | Storage cost | EKS cost | Total cost |
|---|---|---|---|---|
| Arm | 1,577.97 | 740.86 | 73.00 | 2,391.83 |
| x86 | 1,972.46 | 740.86 | 73.00 | 2,786.32 |

AWS Asia Pacific (Singapore)

| CPU type | EC2 cost | Storage cost | EKS cost | Total cost |
|---|---|---|---|---|
| Arm | 1,866.46 | 856.71 | 73.00 | 2,796.17 |
| x86 | 2,333.08 | 856.71 | 73.00 | 3,262.79 |
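Each total is the sum of the EC2, storage, and EKS columns. The 73.00 EKS figure matches a per-cluster fee of USD 0.10 per hour over 730 hours. That hourly rate is an assumption here, because this post does not state it. The following Python sketch recomputes the totals and the Arm cluster's relative monthly saving. All names are illustrative.

```python
HOURS_PER_MONTH = 730
EKS_FEE_PER_HOUR = 0.10  # assumed EKS per-cluster fee (USD/hr); 0.10 * 730 = 73.00

# (EC2 cost, storage cost) in USD per month, copied from the tables above.
MONTHLY = {
    ("US West (Oregon)", "Arm"): (1577.97, 740.86),
    ("US West (Oregon)", "x86"): (1972.46, 740.86),
    ("Asia Pacific (Singapore)", "Arm"): (1866.46, 856.71),
    ("Asia Pacific (Singapore)", "x86"): (2333.08, 856.71),
}


def total_monthly(ec2: float, storage: float) -> float:
    """EC2 + storage + EKS control-plane fee, rounded to cents."""
    eks = EKS_FEE_PER_HOUR * HOURS_PER_MONTH
    return round(ec2 + storage + eks, 2)


def arm_saving(region: str) -> float:
    """Relative monthly saving of the Arm cluster: (x86 - Arm) / x86."""
    arm = total_monthly(*MONTHLY[(region, "Arm")])
    x86 = total_monthly(*MONTHLY[(region, "x86")])
    return (x86 - arm) / x86


if __name__ == "__main__":
    for region in ("US West (Oregon)", "Asia Pacific (Singapore)"):
        print(f"{region}: Arm saves {arm_saving(region):.1%} per month")
```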

Preparation

To deploy a TiDB cluster on an x86-based EKS cluster, follow the steps in Deploy TiDB on AWS EKS.

To deploy a TiDB cluster on an Arm-based EKS cluster, follow the steps in TiDB Deployment on Graviton2-based EKS. The following temporary Arm images are used for benchmarking:

- pingcap2021/tidb-operator:v1.1.11

- pingcap2021/pd:v4.0.10

- pingcap2021/tikv:v4.0.10

- pingcap2021/tidb:v4.0.10

- pingcap2021/tidb-monitor-initializer:v4.0.10

These images are temporary and not intended for production environments. Stay tuned for the official Arm images.

TPC-C benchmark

As you review the following benchmark tests, keep in mind that these are preliminary results. They should not be considered official TPC-C results.

To facilitate benchmarking, TiUP has integrated the bench component, which provides two workloads for stress testing: TPC-C and TPC-H. The commands and flags are as follows:

```
tiup bench
```

```
Starting component `bench`: /Users/joshua/.tiup/components/bench/v0.0.1/bench
Benchmark database with different workloads

Usage:
  tiup bench [command]

Available Commands:
  help        Help about any command
  tpcc
  tpch

Flags:
      --count int           Total execution count, 0 means infinite
  -D, --db string           Database name (default "test")
  -d, --driver string       Database driver: mysql
      --dropdata            Cleanup data before prepare
  -h, --help                help for /Users/joshua/.tiup/components/bench/v0.0.1/bench
  -H, --host string         Database host (default "127.0.0.1")
      --ignore-error        Ignore error when running workload
      --interval duration   Output interval time (default 10s)
      --isolation int       Isolation Level 0: Default, 1: ReadUncommitted,
                            2: ReadCommitted, 3: WriteCommitted, 4: RepeatableRead,
                            5: Snapshot, 6: Serializable, 7: Linerizable
      --max-procs int       runtime.GOMAXPROCS
  -p, --password string     Database password
  -P, --port int            Database port (default 4000)
      --pprof string        Address of pprof endpoint
      --silence             Do not print error when running workload
      --summary             Print summary TPM only, or also print current TPM when running workload
  -T, --threads int         Thread concurrency (default 16)
      --time duration       Total execution time (default 2562047h47m16.854775807s)
  -U, --user string         Database user (default "root")
```

For TPC-C, the TiUP bench component supports the following commands and flags to run the test:

```
tiup bench tpcc
```

```
Available Commands:
  check       Check data consistency for the workload
  cleanup     Cleanup data for the workload
  prepare     Prepare data for the workload
  run         Run workload

Flags:
      --check-all          Run all consistency checks
  -h, --help               help for tpcc
      --output string      Output directory for generating csv file when preparing data
      --parts int          Number to partition warehouses (default 1)
      --tables string      Specified tables for generating file, separated by ','. Valid only if output is set. If this flag is not set, generate all tables by default.
      --warehouses int     Number of warehouses (default 10)
```

TPC-C workloads

This table summarizes the workloads we used, both in terms of the number of warehouses and the data sizes.

| Workload | Warehouses | Data size |
|---|---|---|
| Large1 | 5,000 | ~500 GB |
| Large2 | 10,000 | ~1 TB |

TPC-C test procedures

1. On the benchmark VM (c5.4xlarge), deploy the latest version of TiUP.

2. Create warehouses. Specify the host as the load balancer's DNS name, because we deployed TiDB in EKS and exposed the database service as the LoadBalancer type:

   ```
   tiup bench tpcc --warehouses 10000 --host xxxxxxxxxxx.elb.us-west-2.amazonaws.com prepare
   ```

3. Run the TPC-C test with different thread counts (we used 150, 300, 500, 800, and 1000). Each test runs for 30 minutes:

   ```
   tiup bench tpcc --warehouses 10000 --host xxxxxxxxxxx.elb.us-west-2.amazonaws.com --threads 150 --time 30m run
   ```

4. Note the tpmC result for each test case. The following is sample output:

   ```
   Finished
   [Summary] DELIVERY - Takes(s): 1796.7, Count: 88527, TPM: 2956.3, Sum(ms): 242093840, Avg(ms): 2734, 90th(ms): 4000, 99th(ms): 8000, 99.9th(ms): 8000
   [Summary] DELIVERY_ERR - Takes(s): 1796.7, Count: 133, TPM: 4.4, Sum(ms): 206560, Avg(ms): 1553, 90th(ms): 4000, 99th(ms): 4000, 99.9th(ms): 8000
   [Summary] NEW_ORDER - Takes(s): 1798.8, Count: 1002915, TPM: 33453.0, Sum(ms): 916326214, Avg(ms): 913, 90th(ms): 1500, 99th(ms): 2000, 99.9th(ms): 4000
   [Summary] NEW_ORDER_ERR - Takes(s): 1798.8, Count: 319, TPM: 10.6, Sum(ms): 118662, Avg(ms): 371, 90th(ms): 1000, 99th(ms): 1500, 99.9th(ms): 1500
   [Summary] ORDER_STATUS - Takes(s): 1798.9, Count: 89022, TPM: 2969.3, Sum(ms): 4346202, Avg(ms): 48, 90th(ms): 80, 99th(ms): 160, 99.9th(ms): 512
   [Summary] ORDER_STATUS_ERR - Takes(s): 1798.9, Count: 1, TPM: 0.0, Sum(ms): 19, Avg(ms): 19, 90th(ms): 20, 99th(ms): 20, 99.9th(ms): 20
   [Summary] PAYMENT - Takes(s): 1798.9, Count: 956516, TPM: 31903.7, Sum(ms): 628421123, Avg(ms): 656, 90th(ms): 1000, 99th(ms): 1500, 99.9th(ms): 2000
   [Summary] PAYMENT_ERR - Takes(s): 1798.9, Count: 201, TPM: 6.7, Sum(ms): 46899, Avg(ms): 233, 90th(ms): 512, 99th(ms): 1000, 99.9th(ms): 1000
   [Summary] STOCK_LEVEL - Takes(s): 1798.9, Count: 89370, TPM: 2980.8, Sum(ms): 6052088, Avg(ms): 67, 90th(ms): 112, 99th(ms): 256, 99.9th(ms): 512
   [Summary] STOCK_LEVEL_ERR - Takes(s): 1798.9, Count: 3, TPM: 0.1, Sum(ms): 342, Avg(ms): 114, 90th(ms): 192, 99th(ms): 192, 99.9th(ms): 192
   tpmC: 33453.0
   ```

5. To proceed with the next workload, change the number of warehouses:

   ```
   tiup bench tpcc --warehouses 5000 --host xxxxxxxxxxx.elb.us-west-2.amazonaws.com --threads 150 --time 30m run
   ```

6. Beginning at step 2, repeat this procedure for different numbers of warehouses.
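The thread sweep and tpmC collection in the TPC-C procedure can be scripted. The following Python sketch builds the `tiup bench tpcc ... run` command for each thread count and extracts the final `tpmC:` line from the output. It is not part of TiUP. The function names are hypothetical, `TIDB_HOST` is a placeholder for your load balancer DNS name, and the sketch assumes that `tiup` is on `PATH` and that the summary may be printed to either stdout or stderr.

```python
import re
import shlex
import subprocess

# Placeholder: replace with the DNS name of your TiDB LoadBalancer service.
TIDB_HOST = "xxxxxxxxxxx.elb.us-west-2.amazonaws.com"
THREADS = [150, 300, 500, 800, 1000]

# Matches the final summary line, for example "tpmC: 33453.0" (not the "TPM:" fields).
TPMC_RE = re.compile(r"tpmC:\s*([0-9]+(?:\.[0-9]+)?)")


def tpcc_run_cmd(warehouses: int, threads: int, duration: str = "30m") -> list[str]:
    """Build a `tiup bench tpcc ... run` command for one test case."""
    return [
        "tiup", "bench", "tpcc",
        "--warehouses", str(warehouses),
        "--host", TIDB_HOST,
        "--threads", str(threads),
        "--time", duration,
        "run",
    ]


def parse_tpmc(output: str) -> float:
    """Return the last tpmC value found in the benchmark output."""
    matches = TPMC_RE.findall(output)
    if not matches:
        raise ValueError("no 'tpmC:' line found in benchmark output")
    return float(matches[-1])


def sweep(warehouses: int) -> dict[int, float]:
    """Run one test per thread count and collect tpmC (assumes tiup is on PATH)."""
    results = {}
    for threads in THREADS:
        cmd = tpcc_run_cmd(warehouses, threads)
        print("running:", shlex.join(cmd))
        proc = subprocess.run(cmd, capture_output=True, text=True, check=True)
        # Search both streams; which one carries the summary is an assumption here.
        results[threads] = parse_tpmc(proc.stdout + proc.stderr)
    return results
```

For example, `sweep(10000)` runs the five Large2 test cases in sequence. At 30 minutes per run, that takes about two and a half hours per warehouse count.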

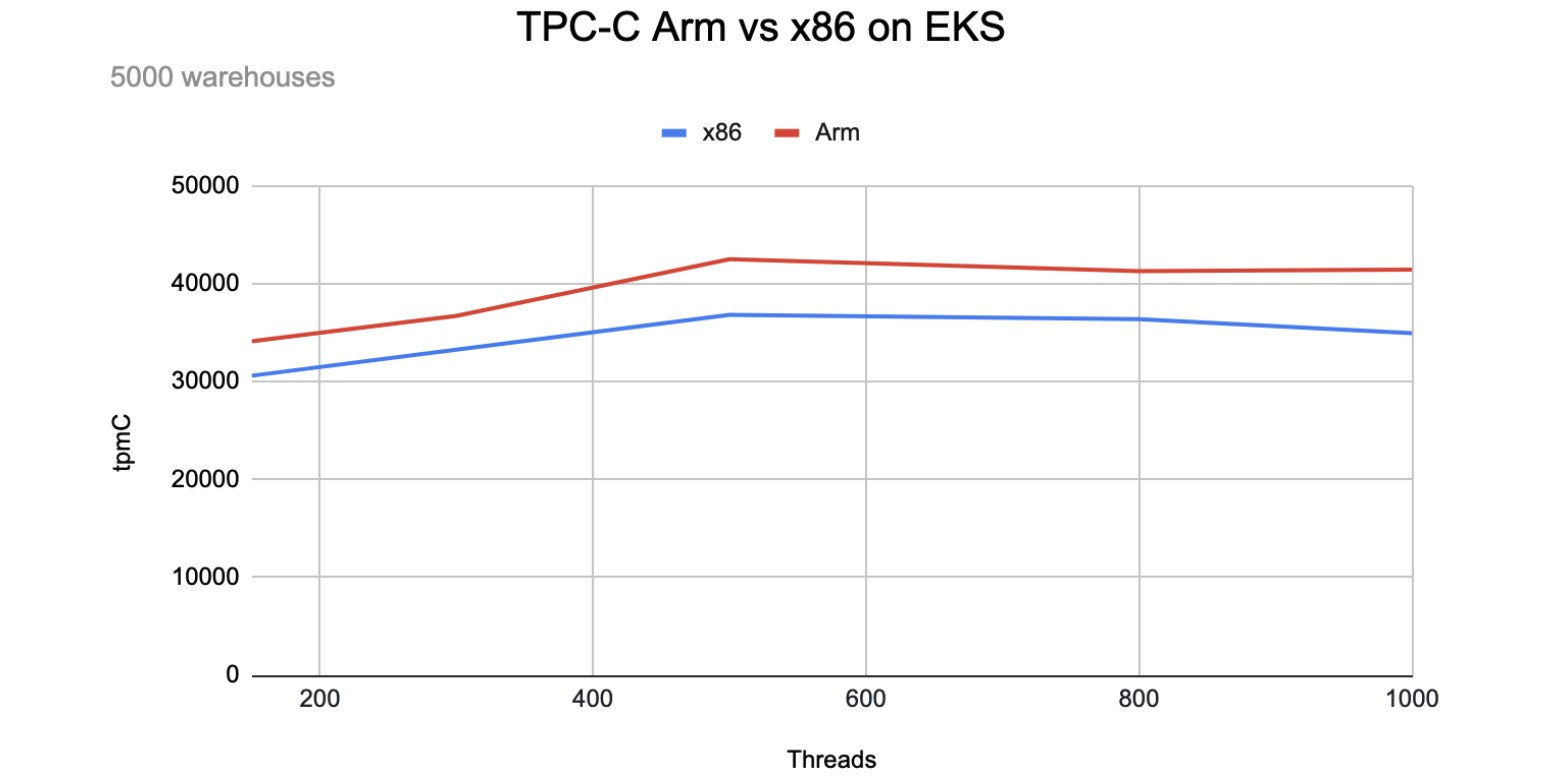

TPC-C benchmark results

The following table shows the results for the Large1 workload:

| Threads | 150 | 300 | 500 | 800 | 1,000 |
|---|---|---|---|---|---|
| x86 tpmC | 30603.8 | 33270.4 | 36813.8 | 36388.6 | 34956.3 |
| Arm tpmC | 34114.3 | 36715.2 | 42493.1 | 41275.0 | 41426.9 |
| (Arm-x86)/x86 | 11.47% | 10.35% | 15.43% | 13.43% | 18.51% |

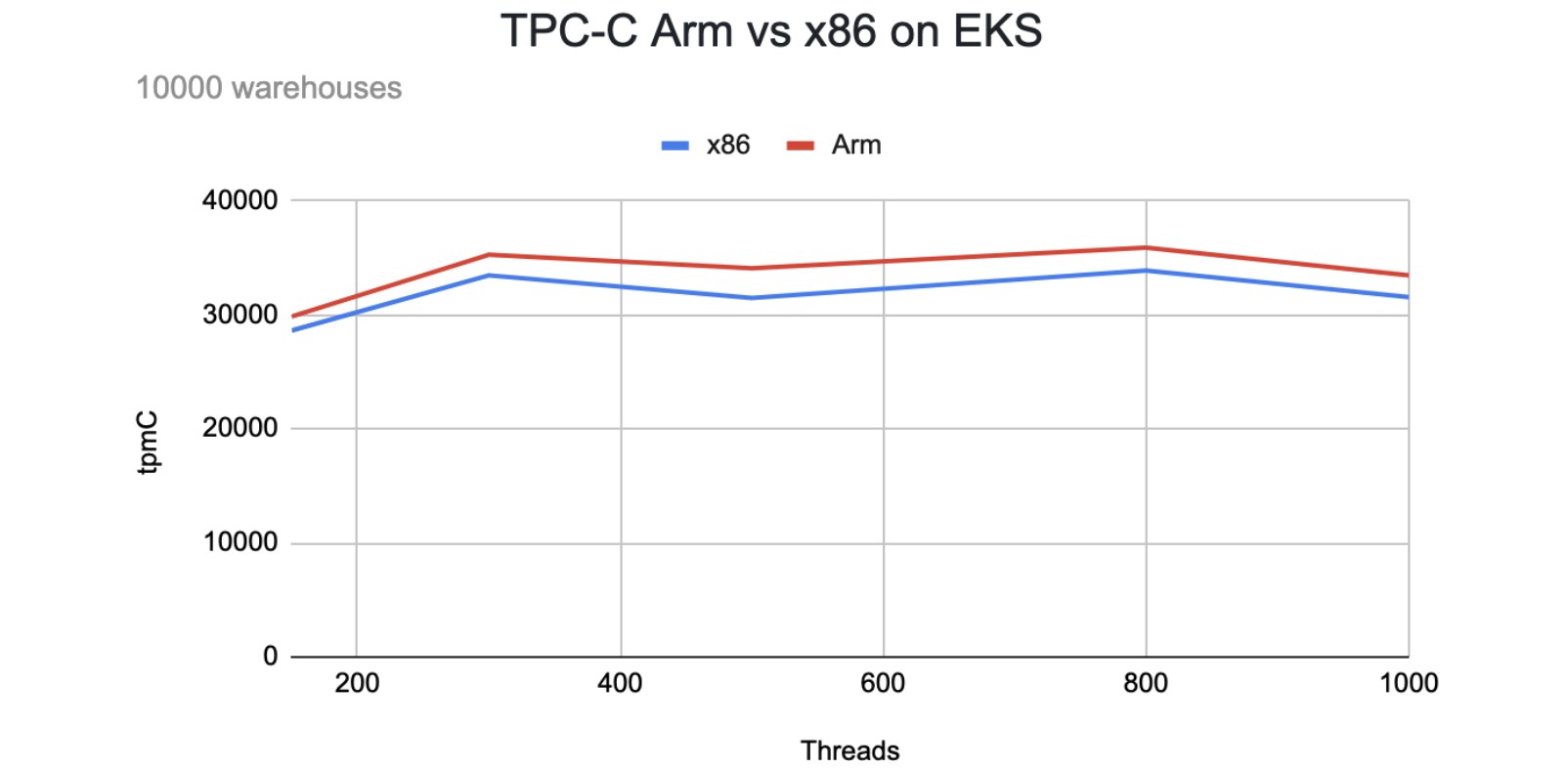

The following table shows the results for the Large2 workload:

| Threads | 150 | 300 | 500 | 800 | 1,000 |
|---|---|---|---|---|---|
| x86 tpmC | 28624.3 | 33464.3 | 31478.6 | 33892.9 | 31562.9 |
| Arm tpmC | 29858.5 | 35259.4 | 34088.0 | 35899.8 | 33453.0 |
| (Arm-x86)/x86 | 4.31% | 5.36% | 8.29% | 5.92% | 5.99% |
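Each (Arm-x86)/x86 row is the relative tpmC difference at a given thread count. The following short Python sketch recomputes both rows from the tpmC values in the two tables. The data layout and function names are illustrative.

```python
THREADS = [150, 300, 500, 800, 1000]

# tpmC per thread count, copied from the Large1 and Large2 result tables.
TPMC = {
    "Large1": {
        "x86": [30603.8, 33270.4, 36813.8, 36388.6, 34956.3],
        "Arm": [34114.3, 36715.2, 42493.1, 41275.0, 41426.9],
    },
    "Large2": {
        "x86": [28624.3, 33464.3, 31478.6, 33892.9, 31562.9],
        "Arm": [29858.5, 35259.4, 34088.0, 35899.8, 33453.0],
    },
}


def relative_gain(arm: float, x86: float) -> float:
    """(Arm - x86) / x86 as a percentage; positive means Arm is faster."""
    return (arm - x86) / x86 * 100


def gains(workload: str) -> list[float]:
    """Relative gain at each thread count for one workload."""
    series = TPMC[workload]
    return [relative_gain(a, x) for a, x in zip(series["Arm"], series["x86"])]


if __name__ == "__main__":
    for workload in TPMC:
        row = ", ".join(f"{t}: {g:.2f}%" for t, g in zip(THREADS, gains(workload)))
        print(f"{workload}: {row}")
```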

TPC-C price-performance ratio

In the following price-performance table:

- The tpmC values are the average tpmC of the 300-, 500-, and 800-thread runs.

- The total system costs for the US and APAC regions reflect the estimated five-year hardware cost.

- Price-performance is the total system cost divided by tpmC, and it compares x86 and Graviton2 Arm processors. A lower value is better: it means lower cost for more performance.

- All costs are in US dollars.

| CPU type | Workload | tpmC | US total system cost | US price-performance | APAC total system cost | APAC price-performance |
|---|---|---|---|---|---|---|
| x86 | Large1 | 35,490.93 | 162,208.80 | 4.57 | 189,804.60 | 5.35 |
| Arm | Large1 | 40,161.10 | 138,539.40 | 3.45 | 161,807.40 | 4.03 |
| (Arm-x86)/x86 | Large1 | | | -24.52% | | -24.66% |
| x86 | Large2 | 32,945.27 | 162,208.80 | 4.92 | 189,804.60 | 5.76 |
| Arm | Large2 | 35,082.40 | 138,539.40 | 3.95 | 161,807.40 | 4.61 |
| (Arm-x86)/x86 | Large2 | | | -19.79% | | -19.94% |

As the results above show, when we compare absolute tpmC under each workload, the Arm-based system outperforms the x86-based system by about 5% to 15%. After we factor in the hardware cost, the Arm price-performance ratio is up to 25% lower than that of x86.
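The price-performance columns can be reproduced from the tables above. Average the 300-, 500-, and 800-thread tpmC values, then divide the five-year total system cost by that average. The following minimal Python sketch does this for the Large1 workload (variable names are illustrative). The same function applies to Large2.

```python
from statistics import mean

# Five-year total system cost (USD), copied from the price-performance table.
FIVE_YEAR_COST = {
    "x86": {"US": 162_208.80, "APAC": 189_804.60},
    "Arm": {"US": 138_539.40, "APAC": 161_807.40},
}

# Large1 tpmC at 300, 500, and 800 threads, copied from the results table.
LARGE1_TPMC = {
    "x86": [33270.4, 36813.8, 36388.6],
    "Arm": [36715.2, 42493.1, 41275.0],
}


def price_performance(total_cost: float, samples: list[float]) -> float:
    """Total system cost divided by average throughput; lower is better."""
    return total_cost / mean(samples)


def relative_change(arm: float, x86: float) -> float:
    """(Arm - x86) / x86 as a fraction."""
    return (arm - x86) / x86


if __name__ == "__main__":
    for region in ("US", "APAC"):
        x86 = price_performance(FIVE_YEAR_COST["x86"][region], LARGE1_TPMC["x86"])
        arm = price_performance(FIVE_YEAR_COST["Arm"][region], LARGE1_TPMC["Arm"])
        print(f"{region}: x86 {x86:.2f}, Arm {arm:.2f}, change {relative_change(arm, x86):.2%}")
```

If you pass the three sysbench TPS values instead of tpmC, the same `price_performance` function yields the sysbench price-performance figures.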

Sysbench

We used `oltp_read_write.lua` to test performance under the OLTP workload.

Sysbench workloads

- Read (75%) and Write (25%)

- Tables: 16

- Table size: 10 million rows per table

- Data size: around 100 GB

Sysbench test procedures

1. Deploy the latest version of sysbench on the benchmark VM (c5.4xlarge).

2. Set the sysbench configurations and save them in a configuration file. Set `threads` to 8 while importing the data. The following is a sample configuration file:

   ```
   mysql-host={TIDB_HOST}
   mysql-port=4000
   mysql-user=root
   mysql-password=password
   mysql-db=sbtest
   time=600
   threads=8
   report-interval=10
   db-driver=mysql
   ```

3. Before you import the data, change the following TiDB setting. Execute this statement in a MySQL client:

   ```sql
   set global tidb_disable_txn_auto_retry = off;
   ```

4. Use BR to import the prepared data from S3.

5. Change `threads` in the configuration file to 300.

6. Run the sysbench test:

   ```
   sysbench --config-file=config oltp_read_write --tables=16 --table-size=10000000 run
   ```

7. Note the test results. The following are sample test results:

   ```
   SQL statistics:
       queries performed:
           read:                            10457930
           write:                           2966386
           other:                           1515584
           total:                           14939900
       transactions:                        746995 (2489.02 per sec.)
       queries:                             14939900 (49780.45 per sec.)
       ignored errors:                      0      (0.00 per sec.)
       reconnects:                          0      (0.00 per sec.)

   General statistics:
       total time:                          300.1146s
       total number of events:              746995

   Latency (ms):
            min:                                   43.84
            avg:                                  120.50
            max:                                  381.92
            95th percentile:                      153.02
            sum:                                90013122.40

   Threads fairness:
       events (avg/stddev):           2489.9833/235.76
       execution time (avg/stddev):   300.0437/0.03
   ```

8. Beginning at step 5, repeat this procedure with `threads` set to 600 and then 900.
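The configuration change in step 5 and the result collection in step 7 can also be scripted. The following Python sketch renders the sample configuration file for a given thread count and pulls TPS, QPS, and P95 latency out of sysbench's text output. The helper names are hypothetical, `{TIDB_HOST}` is left as the same placeholder used above, and the regular expressions assume the output layout shown in the sample.

```python
import re

# Values from the sample configuration file; {TIDB_HOST} stays a placeholder.
BASE_CONFIG = {
    "mysql-host": "{TIDB_HOST}",
    "mysql-port": "4000",
    "mysql-user": "root",
    "mysql-password": "password",
    "mysql-db": "sbtest",
    "time": "600",
    "report-interval": "10",
    "db-driver": "mysql",
}

# Patterns for the lines of interest in sysbench's text report.
PATTERNS = {
    "tps": r"transactions:\s+\d+\s+\(([\d.]+) per sec\.\)",
    "qps": r"queries:\s+\d+\s+\(([\d.]+) per sec\.\)",
    "p95_ms": r"95th percentile:\s+([\d.]+)",
}


def render_config(threads: int) -> str:
    """Render a sysbench --config-file body with the given thread count."""
    options = dict(BASE_CONFIG, threads=str(threads))
    return "\n".join(f"{key}={value}" for key, value in options.items()) + "\n"


def parse_sysbench(output: str) -> dict[str, float]:
    """Extract TPS, QPS, and P95 latency (ms) from sysbench output."""
    result = {}
    for name, pattern in PATTERNS.items():
        match = re.search(pattern, output)
        if match is None:
            raise ValueError(f"could not find {name} in sysbench output")
        result[name] = float(match.group(1))
    return result
```

For example, writing `render_config(600)` to the file named `config` prepares the 600-thread run.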

Sysbench benchmark results

Results for 300, 600, and 900 threads are listed below. Because QPS and TPS in sysbench are proportional, we compare only TPS.

| Metric | 300 threads | 600 threads | 900 threads |
|---|---|---|---|
| x86 P95 latency (ms) | 155.80 | 282.25 | 427.07 |
| Arm P95 latency (ms) | 147.61 | 267.41 | 383.33 |
| x86 QPS | 48814.94 | 52342.03 | 52413.17 |
| Arm QPS | 51892.58 | 55886.22 | 57465.90 |
| x86 TPS | 2440.75 | 2617.10 | 2620.66 |
| Arm TPS | 2594.63 | 2794.31 | 2873.30 |
| TPS: (Arm-x86)/x86 | 6.30% | 6.77% | 9.64% |
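QPS and TPS are proportional because, with sysbench's default `oltp_read_write` options, each transaction issues 20 statements: 14 reads, 4 writes, plus BEGIN and COMMIT. The sample output above shows exactly 20 queries per transaction (14,939,900 queries over 746,995 transactions). The following quick Python check applies the same ratio to the table values. The names are illustrative.

```python
# QPS and TPS at 300, 600, and 900 threads, copied from the results table.
QPS = {"x86": [48814.94, 52342.03, 52413.17], "Arm": [51892.58, 55886.22, 57465.90]}
TPS = {"x86": [2440.75, 2617.10, 2620.66], "Arm": [2594.63, 2794.31, 2873.30]}


def queries_per_transaction(qps: list[float], tps: list[float]) -> list[float]:
    """Ratio of QPS to TPS at each thread count."""
    return [q / t for q, t in zip(qps, tps)]


if __name__ == "__main__":
    for arch in ("x86", "Arm"):
        ratios = queries_per_transaction(QPS[arch], TPS[arch])
        print(arch, [round(r, 3) for r in ratios])
```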

Sysbench price-performance ratio

In the following price-performance table:

- TPS values are the average TPS of the 300-, 600-, and 900-thread runs.

- The total system cost reflects the estimated five-year hardware cost. All costs are in US dollars.

- Price-performance is the total system cost divided by TPS, and it compares x86 and Graviton2 Arm processors. A lower value is better: it indicates lower cost for more performance.

| CPU type | TPS | US total system cost | US price-performance | APAC total system cost | APAC price-performance |
|---|---|---|---|---|---|
| x86 | 2,559.50 | 162,208.80 | 63.38 | 189,804.60 | 74.16 |
| Arm | 2,754.08 | 138,539.40 | 50.30 | 161,807.40 | 58.75 |
| (Arm-x86)/x86 | | | -20.64% | | -20.77% |

Conclusion

Benchmarking results from both TPC-C and sysbench show that the Graviton2 processor outperforms the x86 processor, in some cases by up to 18%. After factoring in the hardware cost, the Graviton2 processor also has a better price-performance ratio than x86: on average, about 20% lower. These results are based only on the workloads we tested (100 GB, 500 GB, and 1 TB). For the limitations of this benchmark and our future plans, see the AWS Startups Blog.
